What is Requesty LLM Gateway?

Requesty is an LLM gateway that acts as intelligent middleware between your application and your LLM providers. Integrate with 200+ LLM providers by changing one value: your base URL. A single API key gives you access to all of them, so you can forget about per-provider top-ups and rate limits.
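As a rough illustration of what "changing the base URL" means in practice, the sketch below builds an OpenAI-style chat completions request where the gateway address is the only provider-specific value. The URL `https://gateway.example.com/v1`, the environment variable names, the model identifier, and the helper `build_chat_request` are all placeholders chosen for this example, not values taken from Requesty; the `/chat/completions` path is the usual OpenAI-compatible convention and is assumed here. Take the real base URL and model names from your Requesty dashboard.

```python
import json
import os
import urllib.request

# Assumption: placeholder gateway URL, not the real Requesty endpoint.
GATEWAY_BASE_URL = os.environ.get("GATEWAY_BASE_URL", "https://gateway.example.com/v1")


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completions request against the given base URL.

    Hypothetical helper for illustration: only `base_url` changes when the
    application is pointed at a gateway instead of a single provider.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request(
        GATEWAY_BASE_URL,
        os.environ.get("GATEWAY_API_KEY", "placeholder-key"),  # assumed variable name
        "example-provider/example-model",  # placeholder model identifier
        "Hello!",
    )
    # The request is only built here; send it with urllib.request.urlopen(request)
    # once the placeholders are replaced with real values.
    print(request.full_url)
```

If you already use an OpenAI-compatible client library, the equivalent change is typically a single configuration value: the client's base URL setting.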

The moment you switch the base URL, you get:

- Tracing: See all your LLM inference calls without changing anything in your code

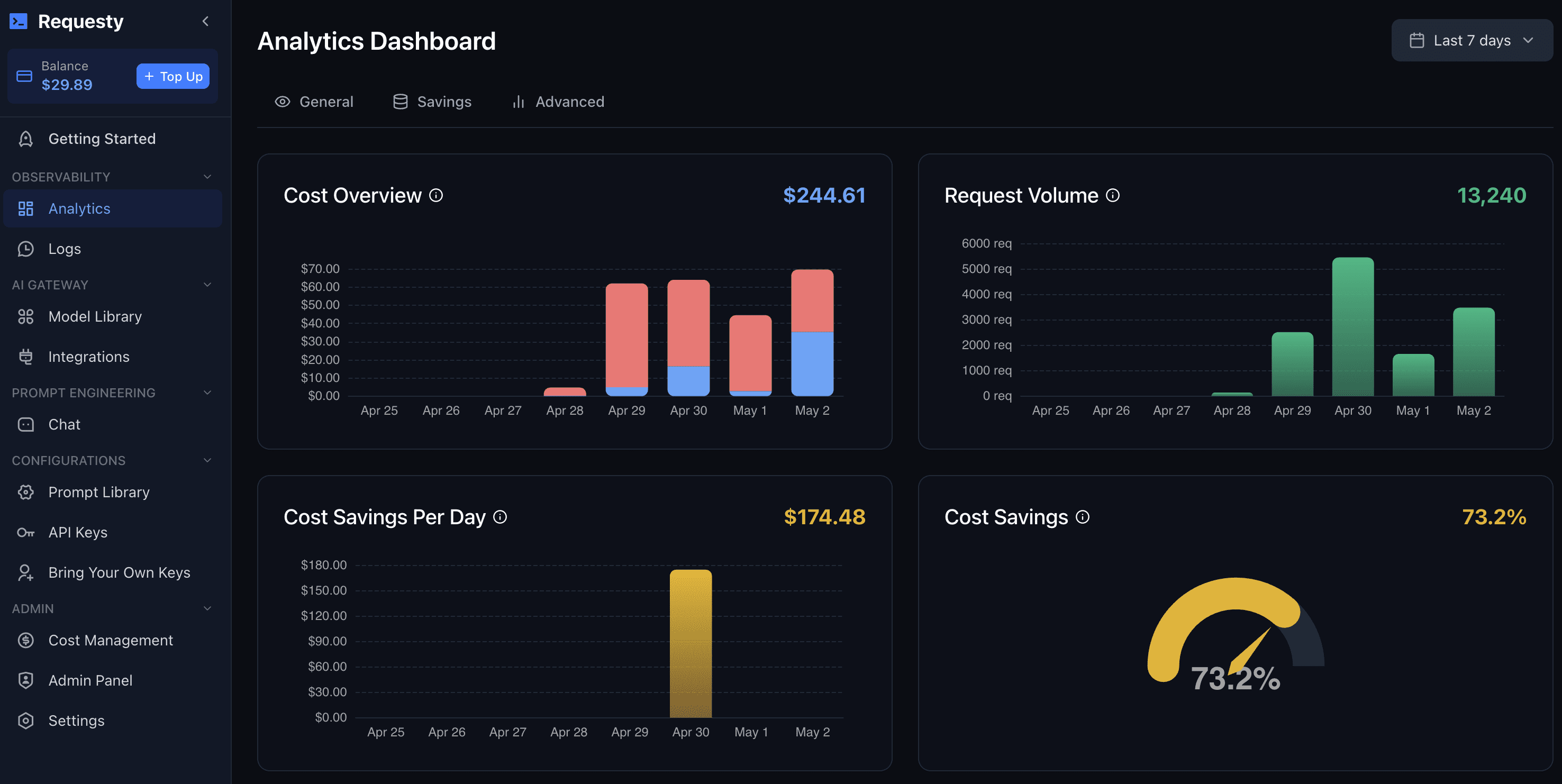

- Telemetry: See latency, request counts, caching rates and more without changing anything in your code

- Billing: See exactly how much you spend with every provider and for every use case

- Data Security: Protect your PII and company secrets by masking them before they hit the LLM provider

- Privacy: Restrict usage to providers in a specific region

- Smart Routing: Route requests based on Requesty's smart routing classification model, saving cost and improving performance

Use Cases and Features

1. Access 200+ models using a single API key without any rate limits

2. Get OpenAI-compatible access to all LLM providers

3. Get aggregated tracing, telemetry and billing for all your LLM inference calls
