DeepSeek R1 vs GPT o1-preview

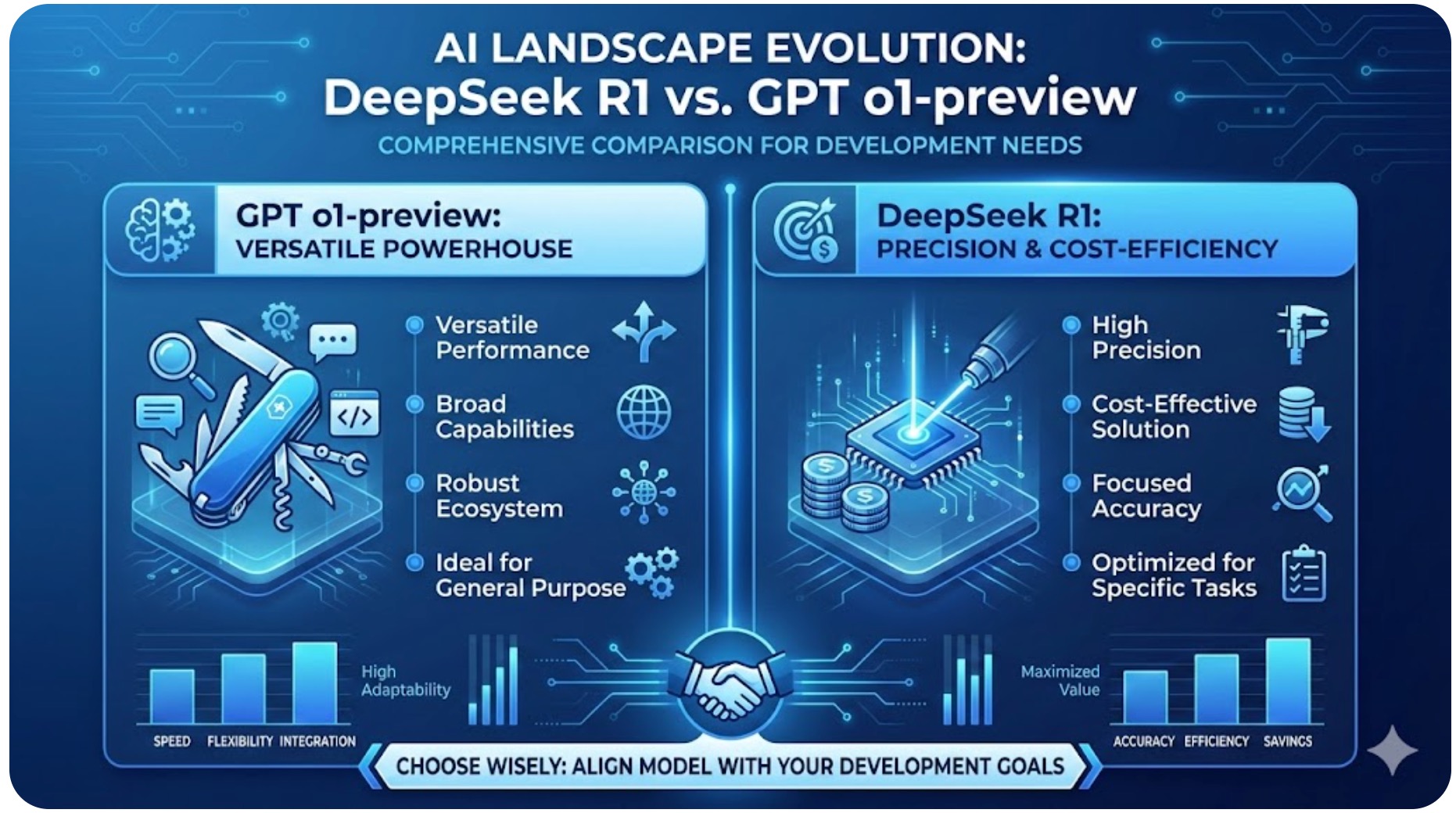

The AI landscape is evolving rapidly with the introduction of DeepSeek R1, a model emphasizing precision and cost-efficiency, and GPT o1-preview, OpenAI's versatile powerhouse. This comprehensive comparison explores their specifications, benchmarks, and real-world performance to help you decide which model aligns best with your development needs.

1. Technical Specifications Comparison

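While both models support a 128K-token input context window, they diverge sharply in output capacity and speed. GPT o1-preview can emit roughly eight times as many output tokens (65K vs 8K) and generates text almost four times faster (144 vs 37.2 tokens/sec).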

| Specification | GPT o1-preview | DeepSeek R1 |
|---|---|---|
| Input Context | 128K | 128K |
| Max Output Tokens | 65K | 8K |
| Parameters | Undisclosed | 671B |
| Speed (tokens/sec) | 144 | 37.2 |
| Knowledge Cutoff | Oct 2023 | Unspecified (Newer) |
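The speed and output-cap rows can be turned into a rough latency estimate. The sketch below makes several assumptions. It reads "K" as 1,000 tokens and treats the listed throughput as constant for the whole response. It also ignores time-to-first-token and any hidden reasoning tokens, so real waits will likely be longer. The `SPECS` dictionary and `estimate_generation_seconds` function are hypothetical helpers, not part of either provider's API.

```python
# Rough generation-time estimate built from the specification table.
# Assumptions: "K" is read as 1,000 tokens, throughput stays constant for the
# whole response, and time-to-first-token / hidden reasoning tokens are ignored.

# Figures copied from the table; not queried from any API.
SPECS = {
    "GPT o1-preview": {"max_output_tokens": 65_000, "tokens_per_sec": 144.0},
    "DeepSeek R1": {"max_output_tokens": 8_000, "tokens_per_sec": 37.2},
}


def estimate_generation_seconds(model: str, output_tokens: int) -> float:
    """Estimate seconds needed for `model` to emit `output_tokens` tokens."""
    spec = SPECS[model]
    if output_tokens > spec["max_output_tokens"]:
        # The request would exceed the model's listed output cap.
        raise ValueError(
            f"{model} is listed with a {spec['max_output_tokens']}-token output cap"
        )
    return output_tokens / spec["tokens_per_sec"]


if __name__ == "__main__":
    for name in SPECS:
        seconds = estimate_generation_seconds(name, 8_000)
        print(f"{name}: {seconds:.1f} s for 8,000 output tokens")
```

Under these assumptions, 8,000 output tokens take about 55.6 s on GPT o1-preview and about 215.1 s on DeepSeek R1. Any response longer than R1's listed cap is rejected outright.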

2. Performance Benchmarks

Combining official release notes and open benchmarks, here is how the two models stack up in specialized domains (higher is better; a dash means no score was reported):

| Category | Benchmark | GPT o1-preview | DeepSeek R1 |
|---|---|---|---|
| Math | MATH-500 | 92 | 97.3 |
| Reasoning | GPQA | 67 | 71.5 |
| Coding | HumanEval | 96 | 96.3 |
| Cybersecurity | CTFs | 43.0 | - |

3. Real-World Practical Tests

Benchmarks are useful, but real-world prompts reveal the true "personality" and reliability of an AI. We tested both models across five critical areas.

4. Pricing: The Cost Efficiency Gap

One of the most striking differences lies in cost. At the per-token prices below, DeepSeek R1 is roughly 26 times cheaper than GPT o1-preview for both input and output. That makes it far more affordable for high-volume tasks.

| Price (USD per 1K tokens) | GPT o1-preview | DeepSeek R1 |
|---|---|---|
| Input Price | $0.01575 | $0.00061 |
| Output Price | $0.06300 | $0.00241 |
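To see what the gap means for a real bill, the sketch below prices a workload from the per-1K-token figures in the table. It assumes those prices stay fixed. It also ignores caching discounts and any reasoning tokens a model generates beyond the visible answer, which are generally billed as output. The 10M-input / 2M-output workload is a hypothetical example, and `workload_cost` is an illustrative helper.

```python
# Workload cost estimate using the per-1K-token prices from the pricing table.
# Assumptions: prices are fixed USD figures; caching discounts and extra
# reasoning tokens (usually billed as output) are not modeled.

PRICES_PER_1K = {
    # model: (input USD per 1K tokens, output USD per 1K tokens)
    "GPT o1-preview": (0.01575, 0.06300),
    "DeepSeek R1": (0.00061, 0.00241),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of processing the given token counts."""
    input_price, output_price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * input_price + (output_tokens / 1000) * output_price


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    for name in PRICES_PER_1K:
        print(f"{name}: ${workload_cost(name, 10_000_000, 2_000_000):,.2f}")
```

For this hypothetical workload the estimate comes to about $283.50 on GPT o1-preview versus about $10.92 on DeepSeek R1.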

Final Verdict

🏆 When to choose GPT o1-preview

- Creative Writing: Produces rich, detailed content and storytelling.

- Web Development: More reliable for generating bug-free HTML/CSS layouts.

- Cybersecurity: The only one of the two with a reported CTF score (43.0).

🏆 When to choose DeepSeek R1

- Math & Logic: Outperforms GPT o1-preview on MATH-500 (97.3 vs 92) and GPQA (71.5 vs 67).

- Cost Efficiency: Roughly 96% cheaper per token, making it ideal for scaling applications.

- Backend Logic: Generates memory-efficient, highly optimized backend code.
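One way to apply the verdict in an application is to encode it as data. The sketch below is a hypothetical routing helper: the `Task` categories and `pick_model` function are illustrative names, not part of either provider's API. It maps each task type to the model recommended above. When the expected response exceeds DeepSeek R1's listed 8K output cap (read here as 8,000 tokens), it falls back to GPT o1-preview.

```python
# Hypothetical routing helper that encodes the "Final Verdict" as data.
# The Task names are illustrative labels, not part of either provider's API.
from enum import Enum


class Task(Enum):
    CREATIVE_WRITING = "creative writing"
    WEB_DEVELOPMENT = "web development"
    CYBERSECURITY = "cybersecurity"
    MATH_AND_LOGIC = "math and logic"
    BACKEND_LOGIC = "backend logic"
    HIGH_VOLUME = "high-volume / cost-sensitive"


# Recommendation per task, taken from the verdict lists.
RECOMMENDED_MODEL = {
    Task.CREATIVE_WRITING: "GPT o1-preview",
    Task.WEB_DEVELOPMENT: "GPT o1-preview",
    Task.CYBERSECURITY: "GPT o1-preview",
    Task.MATH_AND_LOGIC: "DeepSeek R1",
    Task.BACKEND_LOGIC: "DeepSeek R1",
    Task.HIGH_VOLUME: "DeepSeek R1",
}

# DeepSeek R1's listed output cap ("8K", read here as 8,000 tokens).
DEEPSEEK_R1_MAX_OUTPUT = 8_000


def pick_model(task: Task, expected_output_tokens: int = 0) -> str:
    """Return the recommended model, falling back when R1's output cap is too small."""
    model = RECOMMENDED_MODEL[task]
    if model == "DeepSeek R1" and expected_output_tokens > DEEPSEEK_R1_MAX_OUTPUT:
        # Only GPT o1-preview's larger output window can hold this response.
        return "GPT o1-preview"
    return model


if __name__ == "__main__":
    print(pick_model(Task.MATH_AND_LOGIC))          # DeepSeek R1
    print(pick_model(Task.MATH_AND_LOGIC, 20_000))  # GPT o1-preview
```

Keeping the recommendations in a dictionary rather than in branching logic makes them easy to revise when benchmark scores or prices change.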

Frequently Asked Questions (FAQ)

Which model is better for coding?

Both are excellent. GPT o1-preview often produces cleaner code for beginners and better frontend web designs. DeepSeek R1 excels at backend logic and memory optimization.

Is DeepSeek R1 free?

No. DeepSeek R1 is not free, but it is significantly cheaper than OpenAI's models: its input cost is approximately 96% lower than GPT o1-preview's.
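The 96% figure follows from the per-1K-token prices in the pricing table. The same arithmetic applied to output prices gives a nearly identical reduction:

```latex
\[
\text{Input: } 1 - \frac{0.00061}{0.01575} \approx 1 - 0.0387 = 0.9613 \approx 96.1\%
\]
\[
\text{Output: } 1 - \frac{0.00241}{0.06300} \approx 1 - 0.0383 = 0.9617 \approx 96.2\%
\]
```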

Why does DeepSeek R1 perform better at math?

Benchmarks show DeepSeek R1 scoring 97.3 on MATH-500 compared to GPT o1-preview's 92. Its architecture appears more attuned to step-by-step logical verification, which may reduce hallucinations in calculations.
