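```javascript
// Requires the official OpenAI Node.js SDK: npm install openai
const { OpenAI } = require('openai');

// Point the OpenAI-compatible client at the api.ai.cc endpoint.
const api = new OpenAI({
  baseURL: 'https://api.ai.cc/v1',
  apiKey: '<YOUR_API_KEY>', // replace with your own API key
});

const main = async () => {
  const result = await api.chat.completions.create({
    model: 'deepseek/deepseek-non-reasoner-v3.1-terminus',
    messages: [
      {
        role: 'system',
        content: 'You are an AI assistant who knows everything.',
      },
      {
        role: 'user',
        content: 'Tell me, why is the sky blue?',
      },
    ],
  });

  // The reply text is in the first choice's message.
  const message = result.choices[0].message.content;
  console.log(`Assistant: ${message}`);
};

// Surface network or authentication errors instead of failing silently.
main().catch(console.error);
```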

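```python
# Requires the official OpenAI Python SDK: pip install openai
from openai import OpenAI

# Point the OpenAI-compatible client at the api.ai.cc endpoint.
client = OpenAI(
    base_url="https://api.ai.cc/v1",
    api_key="<YOUR_API_KEY>",  # replace with your own API key
)

response = client.chat.completions.create(
    model="deepseek/deepseek-non-reasoner-v3.1-terminus",
    messages=[
        {
            "role": "system",
            "content": "You are an AI assistant who knows everything.",
        },
        {
            "role": "user",
            "content": "Tell me, why is the sky blue?",
        },
    ],
)

# The reply text is in the first choice's message.
message = response.choices[0].message.content
print(f"Assistant: {message}")
```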

Product Detail

✨ DeepSeek V3.1 Chat is the non-thinking variant of DeepSeek's hybrid V3.1 model, focused on fast, direct responses without complex reasoning. Building on the DeepSeek V3.1 architecture, it omits the thinking mode and prioritizes low-latency output while maintaining strong multimodal capabilities. It is optimized for applications that need efficient, straightforward interactions across chat, code generation, and agent workflows, and suits developers and enterprises that value rapid response times and streamlined task execution.

Technical Specifications

- Context window up to 128K tokens to support extended multi-turn conversations and code sessions.

- Output limit of up to 8,000 tokens per response, suited to concise, coherent answers.

- Hybrid transformer architecture with Mixture of Experts (MoE) layers for efficient compute allocation.

- Supports structured tool calls, code agent functionality, and search agents to enhance task flexibility.

- Extended training on long-context datasets, with FP8 microscaling used for inference efficiency.

- Multilingual support across 100+ languages with high contextual accuracy.
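The 128K-token context window has to hold the conversation history, and typically the reply as well, so long multi-turn sessions eventually need trimming. The sketch below keeps the system prompt plus as many recent turns as fit, reserving room for the 8,000-token output limit. It rests on stated assumptions: 128K is read as 128,000; input plus reserved output must fit in that window; tokens are approximated at roughly four characters each (a common rule of thumb, not this model's actual tokenizer); and `trim_history` and `estimate_tokens` are hypothetical helpers, not part of any SDK.

```python
import math

CONTEXT_WINDOW = 128_000   # from the spec above; 128K read as 128,000 (assumption)
MAX_OUTPUT_TOKENS = 8_000  # output limit from the spec above
CHARS_PER_TOKEN = 4        # rough heuristic, NOT the model's real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate a token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def trim_history(messages: list[dict], reserve: int = MAX_OUTPUT_TOKENS) -> list[dict]:
    """Keep the system prompt and the newest turns that fit the input budget.

    Hypothetical helper: assumes messages[0] is the system prompt and that
    the input plus `reserve` output tokens must fit inside CONTEXT_WINDOW.
    """
    budget = CONTEXT_WINDOW - reserve
    system, turns = messages[0], messages[1:]
    used = estimate_tokens(system["content"])
    kept: list[dict] = []
    # Walk backwards so the most recent turns are kept first.
    for msg in reversed(turns):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return [system] + list(reversed(kept))
```

For exact numbers, the model's own tokenizer or the `usage` field returned by the endpoint (where provided) would replace the character heuristic; the heuristic just keeps the sketch dependency-free.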

Key Features

🚀 DeepSeek V3.1 Chat operates exclusively in non-thinking mode, delivering fast, direct answers without multi-step reasoning. This configuration enables very low-latency interactions, well suited to straightforward queries and task execution. The model retains enhanced tool- and agent-calling capabilities, so it integrates smoothly with code agents and search agents in versatile workflows. Its optimized MoE transformer architecture keeps resource use efficient, and it supports structured function calls for precise tool invocation. Although it does not perform deep reasoning, it is well suited to multimodal chat, rapid code generation, and streamlined agentic workflows that require fast, reliable output.
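In OpenAI-compatible chat APIs, structured function calling usually follows a define, call, dispatch pattern: the request lists available tools with JSON Schema parameters, the model replies with `tool_calls` whose `arguments` field is a JSON string, and the application runs the matching function and returns the result in a `"tool"` message. The sketch below covers only the application side, using plain dictionaries so it runs offline. It assumes this endpoint follows the OpenAI tool-calling format; `get_weather`, its schema, and `run_tool_calls` are hypothetical examples.

```python
import json


# Hypothetical local tool the model may ask to call (stubbed result).
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}


# Tool definition in the OpenAI-compatible "tools" format (assumed supported here).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

# Maps tool names the model may emit to local Python callables.
REGISTRY = {"get_weather": get_weather}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested tool call and build the matching "tool" messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results
```

With the Python example at the top of this page, `TOOLS` would be passed as `tools=TOOLS` to `client.chat.completions.create(...)`. The SDK returns objects rather than dicts, so the assistant message would first be converted (for example with `message.model_dump()` in the v1 Python SDK) before calling `run_tool_calls`, and the resulting messages appended to the history for the next request.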

Performance and Capabilities

- ⚡ Rapid response times with optimized tool utilization and minimal computational overhead.

- High accuracy in direct code generation and task execution with low error rates.

- Effective handling of multimodal inputs, including text and images, for non-reasoning tasks.

- Scalable across software engineering, research assistance, and agentic application environments.

Use Cases

- Fast software engineering assistance including code synthesis and debugging.

- Multimodal chatbots focusing on image and text understanding with prompt responses.

- Routine research and document analysis with moderate context requirements.

- Efficient multi-turn dialogue suited to basic task-oriented educational tools.

- Business intelligence processes emphasizing rapid visual data interpretation.

- Agentic applications requiring intelligent tool calls without deep reasoning overhead.
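Chat-completions endpoints are stateless, so multi-turn dialogue works by resending the accumulated history with every request. The sketch below shows that bookkeeping. `Conversation` is a hypothetical helper, and the `send` callable stands in for a real API call (for example, a small wrapper around `client.chat.completions.create` from the Python example at the top of this page) so the sketch runs offline.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keeps the running message history for a stateless chat API."""

    def __init__(self, system_prompt: str) -> None:
        self.messages: list[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str, send: Callable[[list[Message]], str]) -> str:
        # Append the user turn, send the full history, then record the reply.
        self.messages.append({"role": "user", "content": user_text})
        reply = send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_send(messages: list[Message]) -> str:
    """Offline stand-in for the API: reports how many messages it received."""
    return f"received {len(messages)} messages"
```

A real `send` might be `lambda msgs: client.chat.completions.create(model="deepseek/deepseek-non-reasoner-v3.1-terminus", messages=msgs).choices[0].message.content`. Because the history grows with every turn, long sessions eventually need older turns trimmed to stay within the 128K-token context window.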

API Pricing

- 1M input tokens: $0.294

- 1M output tokens: $0.441
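Because prices are quoted per million tokens, a request's cost is each token count divided by 1,000,000 and multiplied by its rate. A small helper makes the arithmetic concrete using the listed prices; `estimate_cost` is a hypothetical name, and the token counts in the example are made up for illustration.

```python
INPUT_PRICE_PER_M = 0.294   # USD per 1M input tokens (listed price)
OUTPUT_PRICE_PER_M = 0.441  # USD per 1M output tokens (listed price)


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for a single request."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000
```

For example, a request with 10,000 input tokens and 2,000 output tokens works out to 0.01 × $0.294 + 0.002 × $0.441 = $0.002940 + $0.000882 = $0.003822. Actual token counts can be taken from a response's `usage` field where the endpoint reports it.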

Code Sample

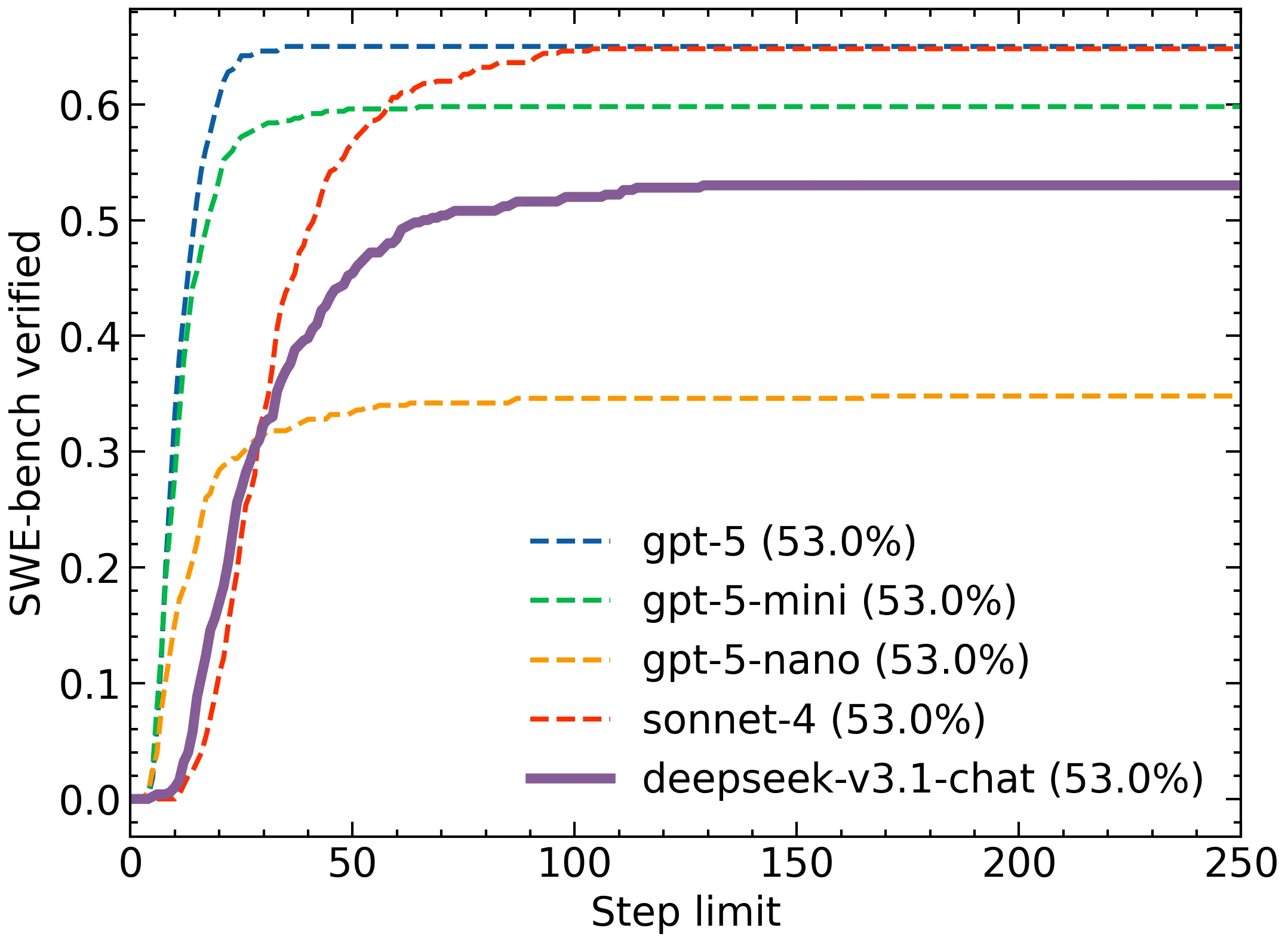

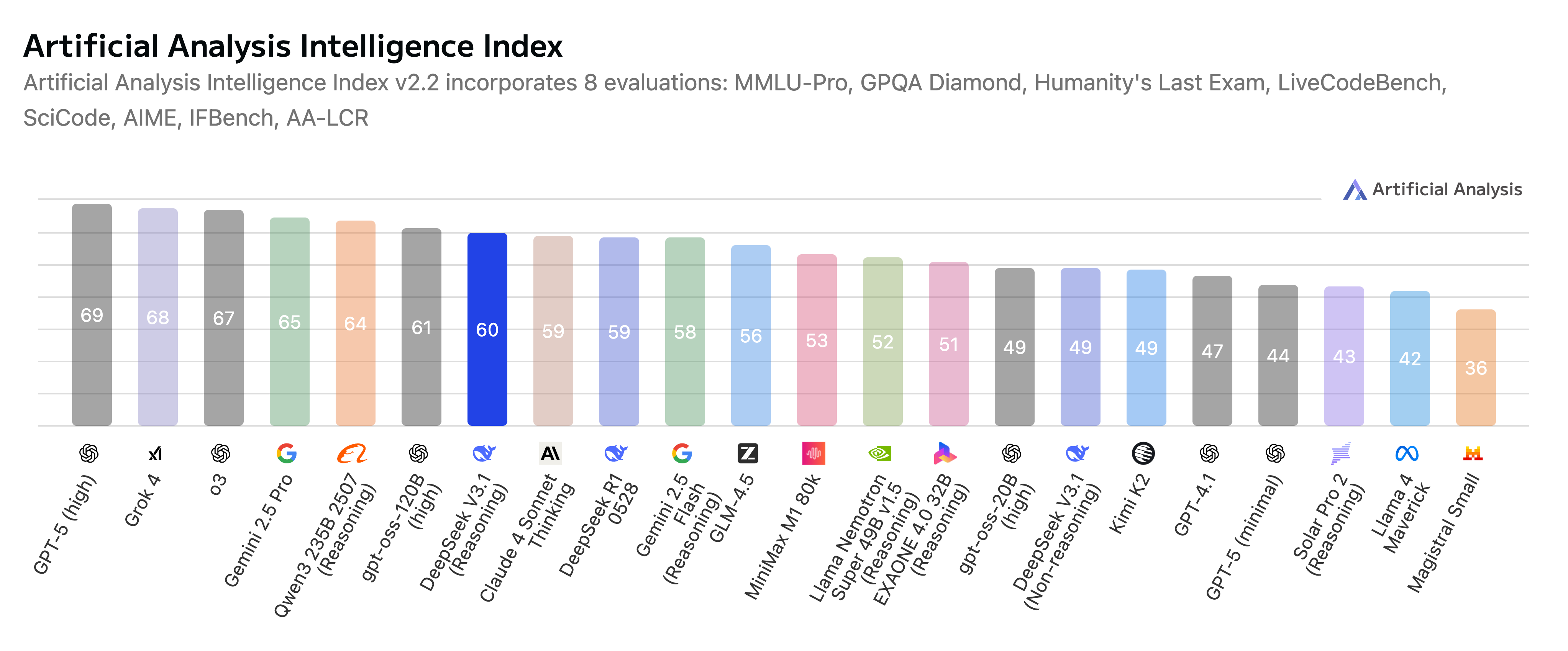

Comparison with Other Models

DeepSeek V3.1 Chat provides an optimal balance of high-speed inference, strong multimodal integration, and cost efficiency for use cases where deep reasoning is unnecessary. It is engineered for developers and enterprises prioritizing speed and streamlined task execution in multimodal conversational AI and agent workflows.

💡 vs GPT-5: GPT-5 offers a much larger 400K-token context window and adds emerging audio and video modalities alongside text and images. DeepSeek V3.1 Chat, by contrast, emphasizes deeper integration of visual context, dynamic expert modularity for efficient compute, and advanced domain adaptation tools. DeepSeek also offers an open-weight model with cost advantages and focuses on image understanding and multimodal fusion, whereas GPT-5 leads in sheer context scale, multimodal breadth, and enterprise ecosystem integration.

💡 vs DeepSeek V3: The new version improves inference speed by approximately 30%, makes more flexible use of its 128K-token context window for chat tasks, and significantly improves multimodal alignment accuracy. These gains are most visible in low-resource languages and complex visual scenarios, making it more capable for advanced conversational AI and large-scale code understanding.

💡 vs OpenAI GPT-4.1: Compared with GPT-4.1's code-optimized, text-centric architecture, DeepSeek V3.1 balances large-scale multimodal inputs with a sophisticated Mixture of Experts training regime. This balance yields superior visual-textual coherence and faster adaptation across diverse multimodal tasks, making DeepSeek especially suited to workflows that require seamless integration of text and images.

Frequently Asked Questions (FAQ)

What is the core purpose of DeepSeek V3.1 Chat?

DeepSeek V3.1 Chat is designed for fast, direct conversational AI, prioritizing low-latency responses for straightforward queries and task execution, rather than complex, multi-step reasoning.

How does DeepSeek V3.1 Chat achieve rapid response times?

The model operates exclusively in a "non-thinking" mode, omitting multi-step reasoning to deliver immediate outputs. Its optimized Mixture of Experts (MoE) transformer architecture also contributes to efficient resource use and speed.

What multimodal capabilities does DeepSeek V3.1 Chat offer?

It maintains strong multimodal capabilities, supporting efficient interactions across text and images for tasks like multimodal chat and rapid code generation, even without deep reasoning functionality.

In which scenarios is DeepSeek V3.1 Chat most effective?

It is optimized for efficient, straightforward interactions in chat, code generation, and agent workflows, making it ideal for developers and enterprises that value rapid response times and streamlined task execution.
