const { OpenAI } = require('openai');

const api = new OpenAI({
  baseURL: 'https://api.ai.cc/v1',
  apiKey: '', // insert your API key here
});

const main = async () => {
  // Send a system prompt and a user question to the model
  const result = await api.chat.completions.create({
    model: 'zhipu/glm-4.5-air',
    messages: [
      {
        role: 'system',
        content: 'You are an AI assistant who knows everything.',
      },
      {
        role: 'user',
        content: 'Tell me, why is the sky blue?'
      }
    ],
  });

  // The reply text is in the first choice
  const message = result.choices[0].message.content;
  console.log(`Assistant: ${message}`);
};

main();

import os

from openai import OpenAI

client = OpenAI(
    base_url="https://api.ai.cc/v1",
    api_key="",  # insert your API key here
)

# Send a system prompt and a user question to the model
response = client.chat.completions.create(
    model="zhipu/glm-4.5-air",
    messages=[
        {
            "role": "system",
            "content": "You are an AI assistant who knows everything.",
        },
        {
            "role": "user",
            "content": "Tell me, why is the sky blue?"
        },
    ],
)

# The reply text is in the first choice
message = response.choices[0].message.content
print(f"Assistant: {message}")
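
For readers not using the SDK, the same call can be sketched as a raw HTTP request. The snippet below only builds the request with the standard library and does not send it. It assumes the service follows the OpenAI-compatible convention of POSTing JSON to `/chat/completions` under the base URL with a Bearer token, which this page does not state explicitly; `build_chat_request` and the placeholder key are illustrative names.

```python
import json
import urllib.request

BASE_URL = "https://api.ai.cc/v1"  # base URL taken from the samples above
MODEL = "zhipu/glm-4.5-air"


def build_chat_request(api_key: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    Assumption: the service accepts the OpenAI-compatible route
    POST {BASE_URL}/chat/completions with a Bearer token.
    """
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are an AI assistant who knows everything."},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("YOUR_API_KEY", "Tell me, why is the sky blue?")
    print(request.get_method(), request.full_url)
    # Sending is left to the caller, for example urllib.request.urlopen(request).
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any tokens are billed.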

Product Detail

✨ Zhipu AI's GLM-4.5-Air is an efficient, cost-effective large language model. Built on an advanced Mixture-of-Experts (MoE) design, it has 106 billion total parameters, of which 12 billion are active. It suits a wide range of text-to-text applications and matches the full GLM-4.5's 128,000-token context window, so it can process and generate very long texts at a much lower computational cost.

Technical Specification

🚀 Performance Benchmarks

- Context Window: 128,000 tokens

- Overall Ranking: 6th across 12 industry benchmarks, with an average score of 59.8.

- Reasoning Prowess: Impressive scores on MMLU-Pro (81.4%), AIME24 (89.4%), and Math (98.1%), alongside solid coding capabilities.
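
To make the 128,000-token figure concrete, here is a rough sketch of a pre-flight check for whether a prompt is likely to fit. The 4-characters-per-token ratio is a crude assumption for English text, not the model's real tokenizer, and `estimate_tokens`, `fits_in_context`, and the default output reserve are hypothetical names and values.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # from the specification above
CHARS_PER_TOKEN = 4  # assumption: crude average for English text


def estimate_tokens(text: str) -> int:
    """Rough token estimate; a real tokenizer will give different counts."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_output_tokens: int = 4_000) -> bool:
    """Return True if the estimated prompt plus an output reserve fits the window."""
    return estimate_tokens(text) + reserved_output_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    document = "word " * 50_000  # 250,000 characters
    print(estimate_tokens(document), fits_in_context(document))
```

Reserving part of the window for the reply matters because the prompt and the generated output share the same token budget.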

📊 Performance Metrics for Agentic Applications

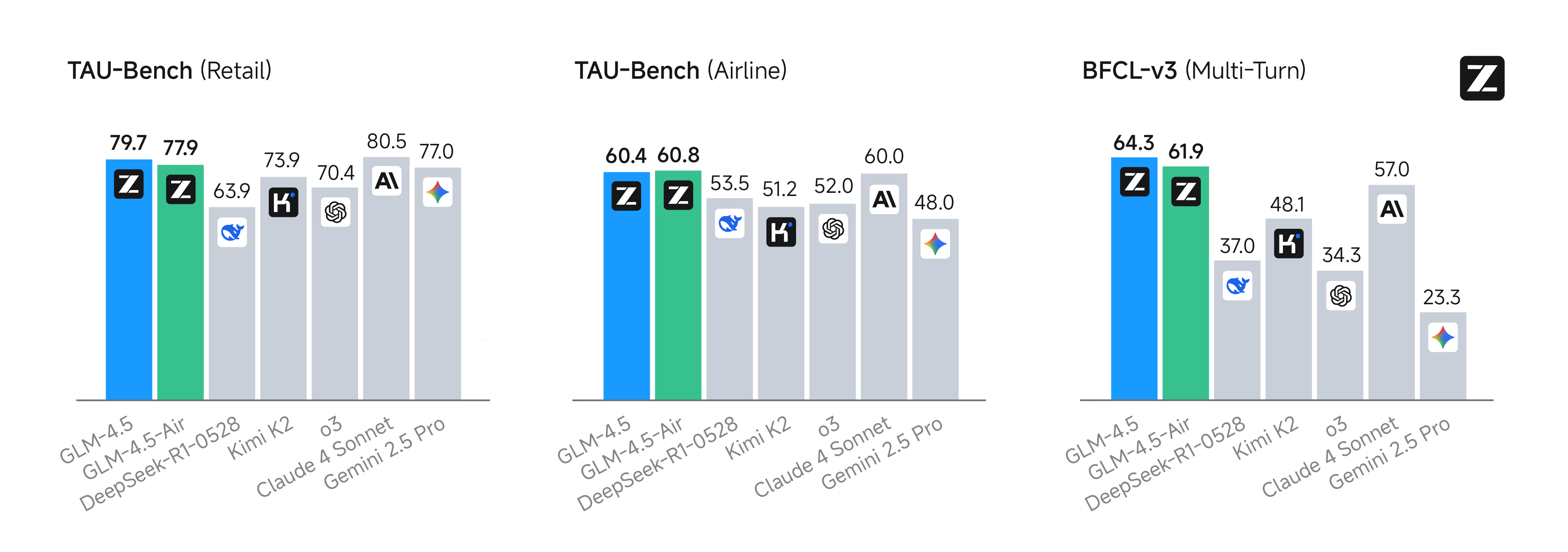

GLM-4.5-Air is built for agentic applications, combining its 128,000-token context window with built-in function calling. On agentic benchmarks such as τ-bench and BFCL-v3, it scores close to Claude 4 Sonnet. On BrowseComp, a web browsing test that evaluates complex multi-step reasoning and tool use, GLM-4.5-Air reaches 26.4% accuracy, ahead of Claude 4 Opus (18.8%) and close to o4-mini-high (28.3%). These figures point to balanced, strong performance in real-world, tool-driven tasks and agentic scenarios.
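
The function execution (tool calling) capability described above can be sketched as follows. The tool schema uses the OpenAI-compatible `tools` format; whether this endpoint accepts it for GLM-4.5-Air is an assumption, and `get_weather`, `TOOLS`, `LOCAL_TOOLS`, and `dispatch_tool_call` are hypothetical names. The model's tool call is mocked so the example runs offline.

```python
import json

# Tool description in the OpenAI-compatible "tools" format (assumed to be accepted here).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Return the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def get_weather(city: str) -> dict:
    """Hypothetical local tool; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


LOCAL_TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(tool_call: dict) -> dict:
    """Run the local function named in a tool call and build the reply message."""
    name = tool_call["function"]["name"]
    # Arguments arrive as a JSON-encoded string, not as a dict.
    arguments = json.loads(tool_call["function"]["arguments"])
    result = LOCAL_TOOLS[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


if __name__ == "__main__":
    # Mocked tool call, shaped like an entry of choices[0].message.tool_calls.
    mocked_call = {
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
    }
    print(dispatch_tool_call(mocked_call))
```

In a live loop, `TOOLS` would be passed as the `tools` argument of `chat.completions.create`, and the message returned by `dispatch_tool_call` would be appended to `messages` before the next call.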

💡 Key Capabilities

- Advanced Text Generation: Produces fluent, contextually precise outputs suitable for long-form content and intricate multi-turn dialogues.

- Efficient Agentic Reasoning: Maintains robust coding, reasoning, and tool-use abilities in both "thinking" (complex problem-solving) and "non-thinking" (instant response) modes.

- Resource Efficiency: Requires significantly less GPU memory (deployable on 16GB GPUs), making it an excellent choice for real-world, hardware-constrained environments.

- Highly competitive for practical development and agent tasks, offering rapid code suggestion and detailed document analysis.

💲 API Pricing

- Input: $0.21

- Output: $1.155
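
As a rough illustration of how these rates translate into a bill, the sketch below estimates the cost of one request. The page does not state the billing unit, so the code assumes the prices are in USD per 1 million tokens; `estimate_cost` is a hypothetical helper.

```python
INPUT_PRICE = 0.21    # from the list above
OUTPUT_PRICE = 1.155  # from the list above
TOKENS_PER_UNIT = 1_000_000  # assumption: prices are quoted per 1M tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD under the per-1M-token assumption."""
    return (input_tokens * INPUT_PRICE + output_tokens * OUTPUT_PRICE) / TOKENS_PER_UNIT


if __name__ == "__main__":
    # Example: a 100,000-token document summarized into 2,000 tokens.
    print(f"${estimate_cost(100_000, 2_000):.4f}")
```

If the actual billing unit differs, only `TOKENS_PER_UNIT` needs to change.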

Optimal Use Cases

- Cost-Effective Conversational AI: Ideal for high-volume, low-latency chatbots and virtual assistants.

- Lightweight Coding Assistance: Provides real-time code completion, debugging, and efficient documentation generation.

- Complex Document Analysis: Capable of analyzing legal, scientific, and business texts at scale.

- Mobile & Edge Deployments: Excels in environments with limited hardware resources.

- Agentic Tools: Powers tool-using agents, web browsing capabilities, and batch content transformation.

Comparison with Other Models

Vs. Claude 4 Sonnet: GLM-4.5-Air offers a competitive balance of efficiency and performance, though it slightly trails Claude 4 Sonnet in certain coding and agentic reasoning tasks. While Claude 4 Sonnet supports a larger context window (200k tokens vs. 128k) and includes image input capabilities (making it more suitable for multimodal applications), GLM-4.5-Air distinguishes itself by being open-source, more cost-effective, and providing strong reliability across function calling and multi-turn reasoning.

Vs. GLM-4.5: GLM-4.5-Air achieves approximately 80-98% of the flagship GLM-4.5’s performance, but with significantly fewer active parameters (12B vs. 32B) and reduced resource requirements. While it might slightly trail in raw task accuracy, it maintains solid reasoning, coding, and agentic capabilities, making it better suited for deployment in hardware-constrained environments.

Vs. Qwen3-Coder: GLM-4.5-Air competes effectively with Qwen3-Coder in coding and tool use, delivering fast and accurate code generation for complex programming tasks, with higher task success rates and more reliable tool calling.

Vs. Gemini 2.5 Pro: GLM-4.5-Air holds its own on practical reasoning and coding benchmarks against Gemini 2.5 Pro. While Gemini may slightly excel in some specific coding and reasoning tests, GLM-4.5-Air offers a favorable balance of a large context window and agentic tooling, optimized for efficient real-world deployments.

Limitations

- Slightly lower overall performance, and fewer active parameters, than the flagship GLM-4.5 model.

- Some complex tasks may show minor drops in performance, though core text and code abilities remain robust.

- Not ideal for organizations that prioritize absolute state-of-the-art accuracy above all other considerations.

- Making full use of its 128,000-token context window and tool support may require infrastructure changes to run efficiently.

Frequently Asked Questions (FAQ)

❓ What is the core advantage of Zhipu AI’s GLM-4.5-Air?

✔️ GLM-4.5-Air’s primary advantage lies in its exceptional efficiency and cost-effectiveness, achieved through a Mixture-of-Experts (MoE) design with 12 billion active parameters, making it highly resource-friendly.

❓ What is the context window size of GLM-4.5-Air?

✔️ It features a substantial 128,000-token context window, allowing for the comprehension and generation of very long and complex texts.

❓ In which areas does GLM-4.5-Air show strong competitive performance?

✔️ It excels in agentic applications and web browsing (outperforming Claude 4 Opus on BrowseComp), and performs strongly in coding, reasoning, and tool use, especially in hardware-constrained settings.

❓ What are the optimal use cases for GLM-4.5-Air?

✔️ Ideal use cases include cost-effective conversational AI, lightweight coding assistance, complex document analysis, and deployments on mobile & edge devices.

❓ What are the main limitations of GLM-4.5-Air?

✔️ Its main limitations include slightly reduced overall performance compared to the flagship GLM-4.5, making it less suitable for scenarios demanding absolute state-of-the-art accuracy above all else.
