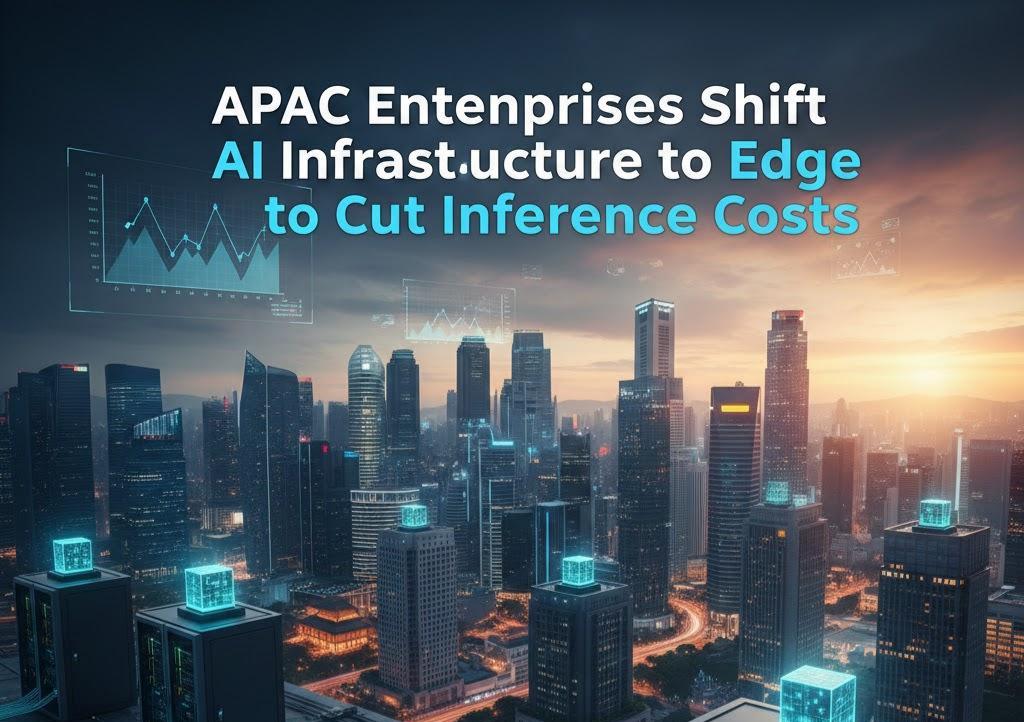

APAC Enterprises Shift AI Infrastructure to Edge to Cut Inference Costs

AI spending in the Asia Pacific (APAC) region is skyrocketing, yet many enterprises struggle to realize tangible ROI. The primary hurdle is infrastructure: existing systems are often not designed for the speed or scale that modern AI inference requires. Industry studies indicate that heavy investment in GenAI tools frequently falls short of its goals because of these architectural limitations.

This performance gap highlights the critical role of AI infrastructure in managing costs and scaling deployments. To address it, Akamai has introduced its Inference Cloud, powered by NVIDIA Blackwell GPUs, which aims to move AI decision-making closer to users in order to cut latency and reduce overhead.

Closing the Gap Between Experimentation and Production

In an interview about APAC enterprises moving AI infrastructure to the edge as inference costs rise, Jay Jenkins, CTO of Cloud Computing at Akamai, noted that many initiatives fail because organizations underestimate what it takes to move from pilot to production. Large infrastructure bills and high latency often stall progress.

Centralized clouds remain the default, but they become prohibitively expensive as usage grows, particularly in regions far from major data centers. "AI is only as powerful as the infrastructure it runs on," Jenkins emphasized, adding that managing multiple clouds and meeting data-compliance requirements make the landscape harder still.

The Shift from AI Training to Real-Time Inference

As AI adoption matures across APAC, the focus is shifting from occasional model training to continuous inference. This day-to-day operational workload now consumes the most computing power, especially as language, vision, and multimodal models are rolled out across diverse markets. Centralized systems, which were never designed for this level of responsiveness, are becoming the main bottleneck.

Edge infrastructure addresses this bottleneck by:

- Reducing Data Distance: Shortening the path data must travel so that models respond faster.

- Lowering Costs: Avoiding heavy egress fees associated with routing data between distant cloud hubs.

- Enabling Real-Time Action: Supporting physical AI systems like robotics and autonomous machines that require millisecond decision-making.
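To make the "data distance" point concrete, the sketch below estimates the minimum round-trip time imposed by signal propagation alone. It assumes light travels through optical fiber at roughly 200,000 km/s (about 200 km per millisecond); the distances are hypothetical values chosen for illustration, not figures from Akamai or any specific deployment.

```python
# Assumption: light in optical fiber travels at roughly 200,000 km/s,
# i.e. about 200 km per millisecond (refractive index of ~1.5).
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing detours, queuing, and model processing time,
    so real-world round trips will be longer.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances, for illustration only.
for label, km in [("distant cloud region", 4000), ("nearby edge site", 50)]:
    print(f"{label}: at least {round_trip_propagation_ms(km):.1f} ms per round trip")
```

Under these assumptions, a request to a data center 4,000 km away cannot complete a round trip in under 40 ms before any inference work begins, while an edge site 50 km away adds only about 0.5 ms. Multi-step AI workflows pay that cost on every call, which is why the gap compounds.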

Industry Adoption: Retail, Finance, and Beyond

Latency-sensitive industries are leading the move to the edge:

- Retail & E-commerce: Localized inference powers personalized recommendations and multimodal search, reducing the risk that users abandon slow-loading experiences.

- Finance: Fraud detection and payment approvals rely on rapid AI decision chains. Processing data locally helps firms maintain speed while keeping data within regulatory borders.

Building the Future: Agentic AI and Security

The next phase of AI involves Agentic AI: systems that autonomously make sequences of decisions. This requires an "AI Delivery Network" that spreads inference across thousands of edge locations. Beyond boosting performance, this distributed approach simplifies data governance, a pressing concern given that 50% of APAC organizations currently struggle with differing regional regulations.

As inference moves to the edge, security remains paramount. Applying Zero Trust controls and protecting API pipelines at every site helps ensure that a more distributed, resilient architecture does not also become a more vulnerable one. For enterprises in 2025 and beyond, the move to the edge is no longer optional; it is a prerequisite for scalable, cost-effective AI.