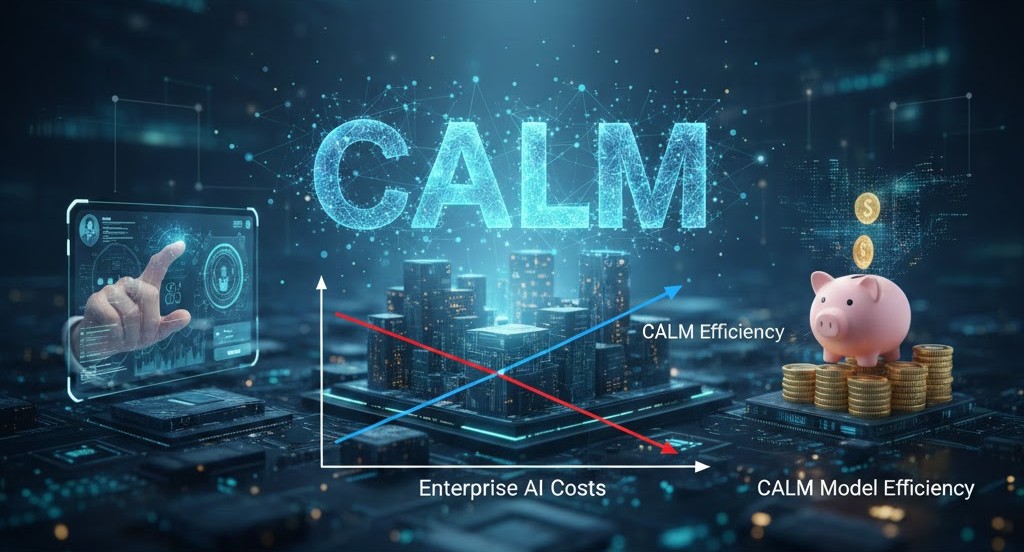

How to Reduce Enterprise AI Costs with CALM Model Design

Enterprise leaders facing skyrocketing AI deployment costs may soon find relief. While generative AI offers immense potential, the heavy computational demands of training and inference have created a significant financial and environmental burden. This inefficiency stems from a "fundamental bottleneck": traditional models generate text one token at a time.

According to the original report, "Keep CALM: New model design could fix high enterprise AI costs", researchers from Tencent AI and Tsinghua University have introduced an alternative architecture designed to make high-volume data processing more efficient in sectors such as financial markets and IoT networks.

Revolutionizing AI Efficiency with CALM

The research presents Continuous Autoregressive Language Models (CALM). The approach re-engineers the generation process by predicting continuous vectors instead of discrete tokens. A high-fidelity autoencoder compresses a chunk of K tokens into a single continuous vector from which the original tokens can be reconstructed, so each vector carries more semantic bandwidth than a single token.
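To make the chunking idea concrete, here is a toy sketch in Python. It is not CALM's autoencoder, which is a trained neural network that genuinely compresses its input. Here the "vector" is simply the concatenation of K fixed random embeddings, and decoding is a nearest-embedding lookup per slot. The chunk size K = 4, the embedding width, the padding id and all helper names are illustrative assumptions. What the sketch does show is the shape of the change: a 10-token sequence becomes 3 vectors, so a model that predicts one vector per step would need 3 generative steps instead of 10.

```python
import random

K = 4        # hypothetical chunk size: tokens per continuous vector
DIM = 8      # hypothetical embedding width per token slot
VOCAB = 50   # toy vocabulary size
PAD = 0      # hypothetical padding id used to fill the final chunk

rng = random.Random(0)
# Fixed random embeddings stand in for learned encoder/decoder weights.
EMBED = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(VOCAB)]


def chunk(tokens, k=K):
    """Split a token sequence into chunks of k, padding the final chunk."""
    padded = tokens + [PAD] * (-len(tokens) % k)
    return [padded[i:i + k] for i in range(0, len(padded), k)]


def encode(chunk_ids):
    """Map k token ids to ONE continuous vector (toy: concatenated embeddings)."""
    vec = []
    for t in chunk_ids:
        vec.extend(EMBED[t])
    return vec


def _sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def decode(vec, k=K):
    """Recover k token ids by nearest-embedding lookup in each slot."""
    ids = []
    for slot in range(k):
        part = vec[slot * DIM:(slot + 1) * DIM]
        ids.append(min(range(VOCAB), key=lambda t: _sq_dist(part, EMBED[t])))
    return ids


tokens = [5, 17, 3, 42, 8, 9, 11, 2, 30, 7]
vectors = [encode(c) for c in chunk(tokens)]
# Decode every vector, flatten, and drop the padding added to the last chunk.
restored = [t for v in vectors for t in decode(v)][:len(tokens)]
print(len(tokens), "tokens ->", len(vectors), "generative steps")  # 10 tokens -> 3 generative steps
print(restored == tokens)  # True
```

A real autoencoder would map each chunk to a vector much smaller than K separate embeddings; the toy keeps them side by side only so that the round trip is easy to verify.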

Because each generative step now produces a whole chunk of K tokens rather than a single token, the number of sequential steps falls by roughly a factor of K, which directly cuts the computational load. Key performance highlights include:

- 44% reduction in training FLOPs (Floating Point Operations).

- 34% reduction in inference FLOPs.

- Performance comparable to standard discrete baselines but at significantly lower operational costs.
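The percentages above translate directly into compute budgets. The sketch below applies the reported 44% and 34% reductions to a workload. The baseline training budget, model size and daily token volume are hypothetical figures chosen for illustration, not numbers from the source, and the "about 2 FLOPs per parameter per token" estimate for a dense Transformer forward pass is a common rule of thumb rather than anything CALM-specific.

```python
# Reported reductions relative to the discrete-token baseline.
TRAIN_FLOP_REDUCTION = 0.44
INFER_FLOP_REDUCTION = 0.34


def after_reduction(baseline_flops, reduction):
    """FLOPs remaining after a fractional reduction."""
    return baseline_flops * (1.0 - reduction)


# Hypothetical workload figures, NOT taken from the source.
baseline_train_flops = 1.0e21
params = 3.0e8            # hypothetical model size (parameters)
tokens_per_day = 5.0e9    # hypothetical daily inference volume

# Rule of thumb: a dense Transformer forward pass costs ~2 FLOPs per parameter per token.
baseline_infer_flops = 2 * params * tokens_per_day

calm_train = after_reduction(baseline_train_flops, TRAIN_FLOP_REDUCTION)
calm_infer = after_reduction(baseline_infer_flops, INFER_FLOP_REDUCTION)
print(f"training:  {baseline_train_flops:.2e} -> {calm_train:.2e} FLOPs")      # training:  1.00e+21 -> 5.60e+20 FLOPs
print(f"inference: {baseline_infer_flops:.2e} -> {calm_infer:.2e} FLOPs/day")  # inference: 3.00e+18 -> 1.98e+18 FLOPs/day
```

FLOPs are only a proxy for cost: actual spend also depends on memory bandwidth, hardware utilization and pricing, so a 34% FLOP reduction need not mean exactly a 34% smaller bill.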

The New Toolkit for Continuous Vector Space

Shifting from a finite vocabulary to an infinite vector space required the development of a "likelihood-free framework." Because the model no longer assigns a probability to each entry in a fixed vocabulary, likelihood-based metrics such as perplexity cannot be computed. The team therefore introduced BrierLM, a new metric based on the Brier score that evaluates model performance from samples alone, without explicit probabilities.
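The reason a Brier-style metric works without probabilities is that it can be estimated from samples. The sketch below assumes the standard two-sample construction: with two independent model samples x1, x2 and the reference y, the quantity 1{x1 = y} + 1{x2 = y} - 1{x1 = x2} is an unbiased estimate of 2p(y) - Σ p(x)², a Brier score in which higher is better. It shows only this core estimator on a toy three-token "model"; BrierLM as defined in the paper may aggregate it differently (for example across n-gram levels or with scaling), and the function names here are hypothetical.

```python
import random


def brier_two_sample(x1, x2, y):
    """Unbiased estimate of 2*p(y) - sum_x p(x)**2 from two independent samples.

    E[1{x1 == y}] = p(y) and E[1{x1 == x2}] = sum_x p(x)**2, so no probability
    is ever read from the model; only samples and the reference y are needed.
    """
    return int(x1 == y) + int(x2 == y) - int(x1 == x2)


def estimate_brier(sampler, y, n=100_000, seed=0):
    """Average the two-sample estimate over n independent pairs of draws."""
    rng = random.Random(seed)
    return sum(brier_two_sample(sampler(rng), sampler(rng), y) for _ in range(n)) / n


TOKENS = ["a", "b", "c"]
WEIGHTS = [0.5, 0.3, 0.2]


def toy_sampler(rng):
    """Black-box toy model: we may draw samples but never read probabilities."""
    return rng.choices(TOKENS, weights=WEIGHTS)[0]


# The exact value is computable here only because the toy model is transparent.
exact = 2 * WEIGHTS[0] - sum(w * w for w in WEIGHTS)  # about 0.62
print(round(exact, 3), round(estimate_brier(toy_sampler, "a"), 3))
```

The sampled estimate converges to the exact value even though `estimate_brier` never sees `WEIGHTS`, which is the property a likelihood-free evaluation needs.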

The framework also preserves controlled generation, a vital feature for enterprise applications. A new likelihood-free sampling algorithm provides temperature-style control, letting businesses tune the balance between output accuracy and creative diversity.
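One way to get temperature control from a model that can only be sampled is rejection sampling. This is a minimal sketch, assuming the temperature has the form T = 1/n for a whole number n: draw n independent samples and accept only when all of them agree. Since the chance that all n draws equal x is p(x)^n, accepted outputs follow p^n renormalized, which is exactly temperature-1/n sampling. The paper's algorithm also handles general temperatures, which this sketch omits, and the function and toy model names are hypothetical.

```python
import random
from collections import Counter


def sample_with_temperature(sampler, n, rng, max_tries=1_000_000):
    """Sample from p(x)**n / Z (temperature T = 1/n) using only draws from p.

    Rejection sampling: draw n independent samples and accept only if all agree.
    P(all n equal x) = p(x)**n, so accepted values follow p**n renormalized.
    """
    for _ in range(max_tries):
        first = sampler(rng)
        # all() stops at the first mismatch; that attempt is rejected either way.
        if all(sampler(rng) == first for _ in range(n - 1)):
            return first
    raise RuntimeError("acceptance rate too low; try a smaller n")


TOKENS = ["a", "b", "c"]
WEIGHTS = [0.5, 0.3, 0.2]


def toy_sampler(rng):
    """Black-box toy model: we may draw samples but never read probabilities."""
    return rng.choices(TOKENS, weights=WEIGHTS)[0]


rng = random.Random(0)
draws = Counter(sample_with_temperature(toy_sampler, 2, rng) for _ in range(50_000))
print({t: round(draws[t] / 50_000, 2) for t in TOKENS})
```

With n = 2 (T = 0.5) the toy distribution 0.5/0.3/0.2 sharpens to roughly 0.66/0.24/0.11, favoring the most likely output; n = 1 leaves it unchanged. Larger n means sharper, more conservative output at the price of more rejected draws.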

Strategic Impact on Enterprise AI Costs

The CALM framework shifts the focus of AI development from simply increasing parameter counts to improving architectural efficiency. As the returns from adding parameters diminish, the ability to increase semantic bandwidth per generative step becomes a critical competitive advantage.

For tech leaders, the priority is shifting. When evaluating vendor roadmaps, the focus must move beyond model size to architectural sustainability. Reducing the energy and cost per token will enable AI to be deployed more economically across the enterprise, from centralized data centers to data-heavy edge applications.

