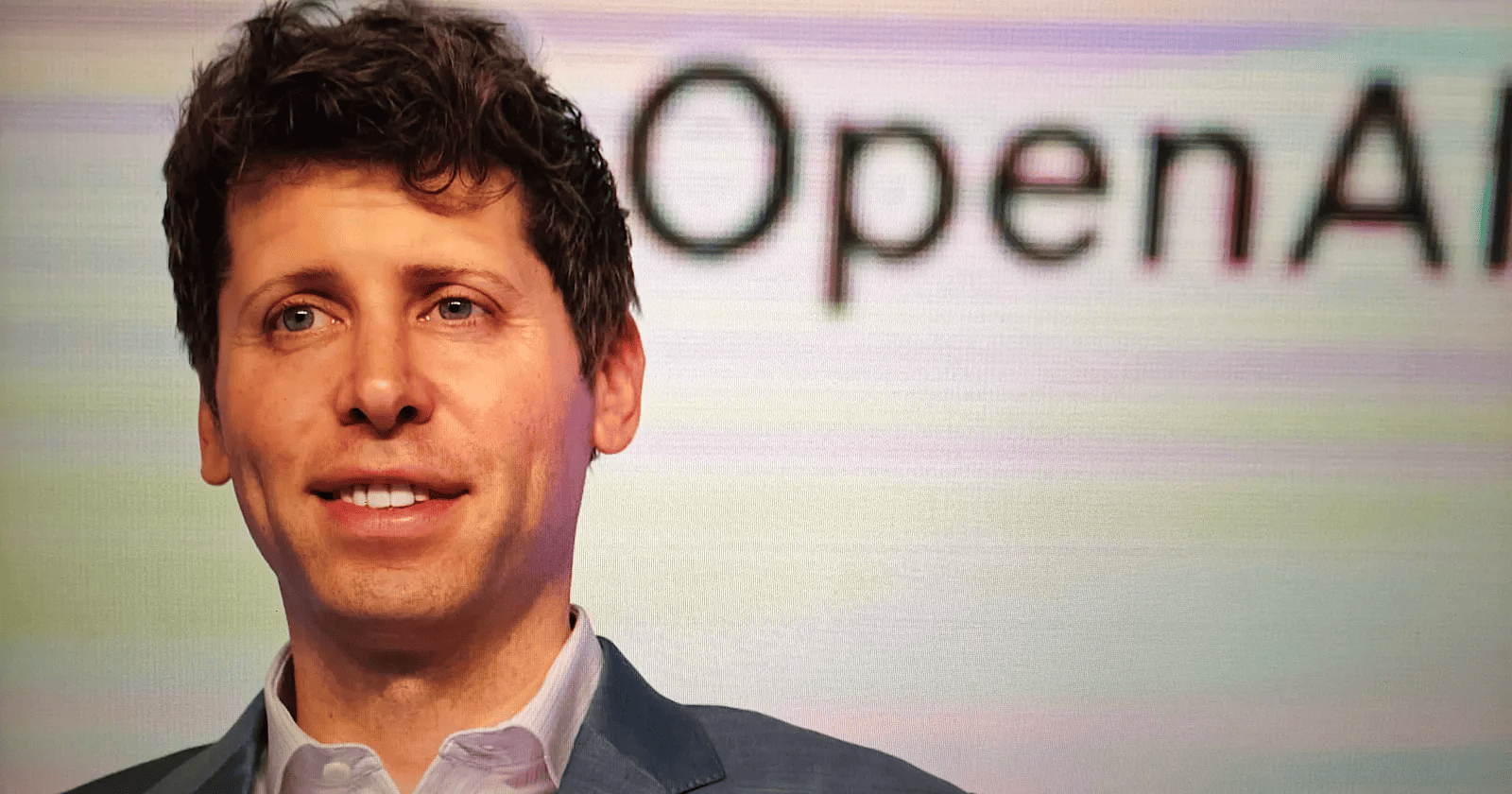

OpenAI Releases Open-Weight AI Safety Models for Developers

OpenAI has launched a research preview of its new "safeguard" models. The gpt-oss-safeguard family gives developers open-weight tools designed specifically for highly customizable content classification and moderation.

The release includes two versions: the larger gpt-oss-safeguard-120b and the more compact gpt-oss-safeguard-20b. Both are published under the permissive Apache 2.0 license, which lets organizations modify the models and deploy them in safety frameworks tailored to their own requirements without restrictive licensing hurdles.

Revolutionizing AI Safety with Dynamic Policy Inference

Unlike traditional safety classifiers, whose rules are fixed during training, these models use their reasoning capabilities to interpret a developer-supplied policy at inference time. According to the original report, "OpenAI unveils open-weight AI safety models for developers," this enables a "reasoning-first" approach in which the developer retains full control over the safety framework.
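The idea of supplying the policy at inference time can be sketched in Python. This is a minimal, hypothetical illustration: the `Policy` class, the `build_messages` helper, the label names, and the chat-message layout (policy text in the system turn, content to classify in the user turn) are assumptions made for this example, not a documented OpenAI API. The model card on Hugging Face is the authority on the actual prompt format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """A developer-written moderation policy, kept as plain text (hypothetical structure)."""
    name: str
    text: str
    labels: tuple[str, ...]


def build_messages(policy: Policy, content: str) -> list[dict[str, str]]:
    """Assemble a chat-style request for a policy-following classifier.

    Assumption: the model reads the policy from the system message and
    classifies the user message; verify against the model card.
    """
    allowed = ", ".join(policy.labels)
    system = (
        f"# Policy: {policy.name}\n"
        f"{policy.text.strip()}\n\n"
        f"Respond with exactly one label from: {allowed}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": content},
    ]


if __name__ == "__main__":
    # Two versions of the same (hypothetical) policy: iterating means editing text.
    spam_v1 = Policy("spam", "Flag unsolicited bulk promotion.", ("VIOLATES", "SAFE"))
    spam_v2 = Policy(
        "spam",
        "Flag unsolicited bulk promotion, including undisclosed affiliate links.",
        ("VIOLATES", "SAFE"),
    )
    post = "Great deals on watches, click my link!"
    for policy in (spam_v1, spam_v2):
        # Only the system prompt changes between versions; no weights are touched.
        print(build_messages(policy, post)[0]["content"])
        print("---")
```

Because the policy travels with each request in this sketch, tightening a rule means editing a string and sending the next request. The resulting message list would then be handed to whatever inference runtime hosts the model.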

Key Advantages for Developers:

- Enhanced Transparency: The models reason through a chain of thought before classifying, so developers can review the reasoning behind each decision instead of receiving an unexplained label from a "black box" system.

- Operational Agility: Safety policies are not baked into the weights. Developers can revise their guidelines and apply them on the next request, without expensive or time-consuming retraining cycles.

Empowering the Open Source Community

This shift moves the industry away from "one-size-fits-all" moderation. Developers working in open-source ecosystems can now enforce their own community standards precisely. The models are set to be hosted on Hugging Face, making them broadly accessible to the global AI research community.