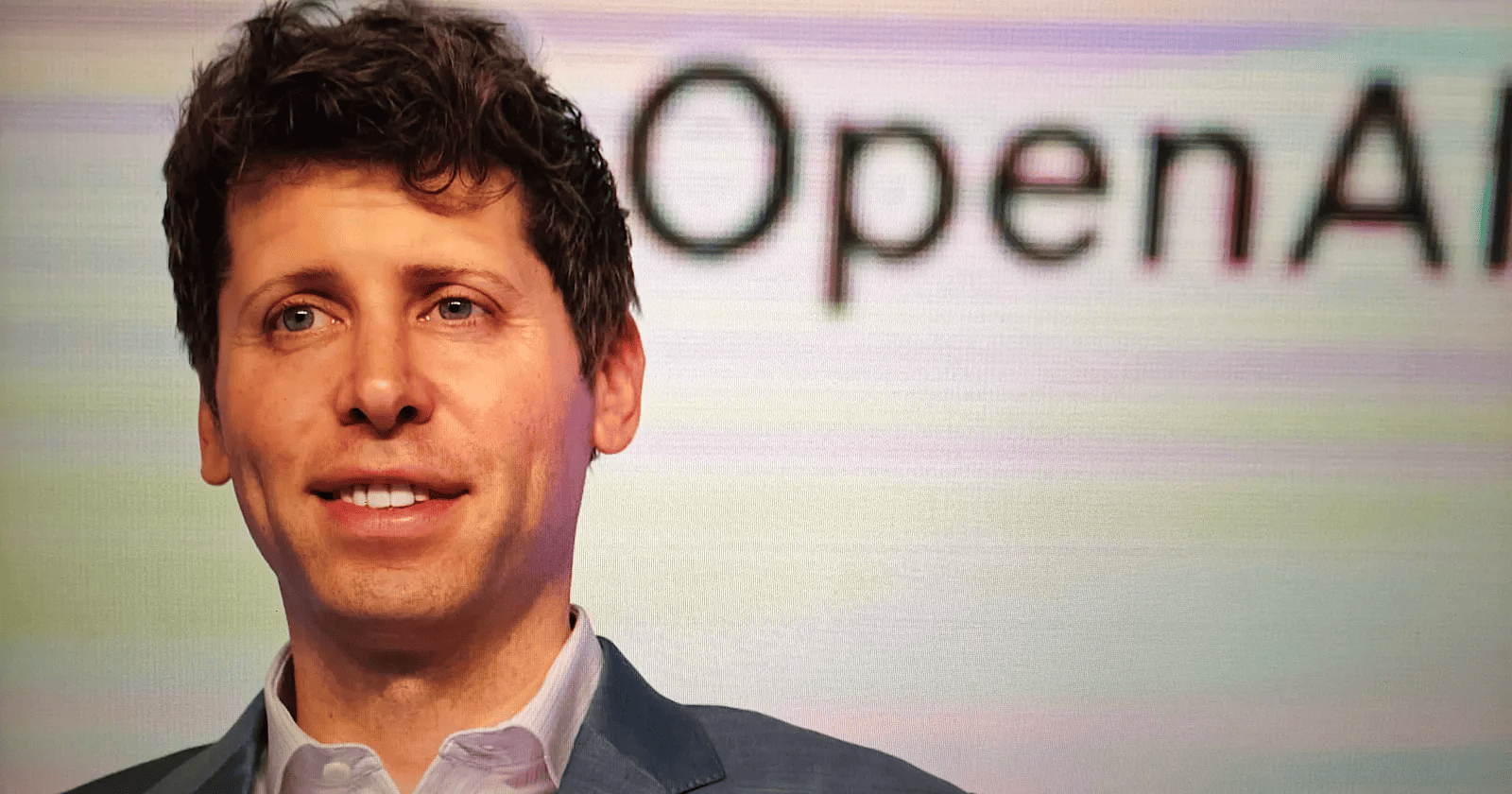

In a candid admission during a recent developer town hall, OpenAI CEO Sam Altman stated that the company "screwed up" the writing quality of its latest model, GPT-5.2. This acknowledgment comes amidst growing user feedback that the model's output feels "unwieldy" and "hard to read" compared to its predecessor, GPT-4.5.

Altman was direct in his response, explaining that the dip in writing proficiency was a result of a strategic trade-off. OpenAI prioritized enhancing the model's capabilities in intelligence, reasoning, coding, and engineering.

A Shift in Focus: From Natural Interaction to Technical Prowess

The contrast between the releases of GPT-4.5 and GPT-5.2 highlights OpenAI's evolving priorities. When GPT-4.5 was introduced in February 2025, the emphasis fell heavily on natural interaction and writing utility. It was marketed as a model that "feels more natural" and is well suited to improving written content.

The announcement for GPT-5.2 took a different turn. It was positioned as the most capable model series for professional knowledge work, spotlighting its ability to handle complex tasks like creating spreadsheets, building presentations, and writing code. While technical writing was mentioned as an improvement, the broader writing experience clearly took a back seat to these functional capabilities.

The Broader Implications for the AI Industry

This incident sheds light on a critical challenge facing the AI industry: the difficulty of maintaining high performance across all domains simultaneously. As models become more complex, optimizing for logic and reasoning can inadvertently degrade linguistic fluency. This kind of trade-off, related to what machine learning researchers call "catastrophic forgetting" (where training on new skills erodes older ones), is a known phenomenon, but hearing a CEO like Altman address it so openly is rare.

For businesses and professionals who rely on ChatGPT for drafting emails, creating content, or polishing prose, this serves as a crucial reminder. Model updates are not always linear improvements. Just like software dependencies, a new model version might introduce regressions in specific areas while advancing in others. It underscores the need for continuous testing and validation of AI workflows.
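To make the idea of testing a workflow across model versions concrete, here is a minimal sketch of a writing regression check. It is an illustration under stated assumptions, not an established evaluation method: the function names (`avg_sentence_length`, `find_regressions`), the `tolerance` threshold, and the sample texts are all hypothetical, and average sentence length is only a crude stand-in for readability. In practice the two dictionaries would hold outputs you saved from the old and new model for the same prompts.

```python
import re
from statistics import mean
from typing import Dict, List


def avg_sentence_length(text: str) -> float:
    """Average words per sentence; a crude, assumed proxy for readability."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        return 0.0
    return mean(len(s.split()) for s in sentences)


def find_regressions(
    baseline: Dict[str, str],
    candidate: Dict[str, str],
    tolerance: float = 1.25,  # hypothetical threshold: flag if 25% wordier per sentence
) -> List[str]:
    """Return prompt ids whose candidate output is missing or notably wordier."""
    flagged = []
    for prompt_id, old_text in baseline.items():
        new_text = candidate.get(prompt_id, "")
        old_score = avg_sentence_length(old_text)
        new_score = avg_sentence_length(new_text)
        if not new_text.strip() or new_score > old_score * tolerance:
            flagged.append(prompt_id)
    return flagged


# Hypothetical saved outputs for the same prompt from two model versions.
baseline_outputs = {
    "email": "Thanks for the update. I will review it today.",
}
candidate_outputs = {
    "email": (
        "Thank you for the update regarding the matter which I will, "
        "in due course and with appropriate diligence, review today."
    ),
}

if __name__ == "__main__":
    print(find_regressions(baseline_outputs, candidate_outputs))
```

A check like this could run whenever a team switches model versions, much as a test suite runs when a software dependency is upgraded. A single metric will miss many kinds of writing regressions, so human review of flagged samples remains sensible.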

Looking Ahead: The Future of General Purpose Models

Despite the current setback, Altman remains optimistic about the future trajectory of OpenAI's models. He expressed his belief that "the future is mostly going to be about very good general purpose models" and that even models focused on coding should eventually "write well, too."

While no specific timeline was provided for the GPT-5.x updates that will address these writing issues, OpenAI's history of iterative point releases suggests that improvements could be rolled out gradually. Users can expect ongoing adjustments as the company balances the dual demands of technical reasoning and natural language generation.

As the AI landscape continues to evolve, the balance between raw computational power and human-like communication remains a key frontier. OpenAI's transparency in this matter sets a precedent for how tech giants might manage user expectations in the face of complex developmental challenges.
