const { OpenAI } = require('openai');

const api = new OpenAI({
  baseURL: 'https://api.ai.cc/v1',
  apiKey: '',
});

const main = async () => {
  const result = await api.chat.completions.create({
    model: 'alibaba/qwen3-max-instruct',
    messages: [
      {
        role: 'system',
        content: 'You are an AI assistant who knows everything.',
      },
      {
        role: 'user',
        content: 'Tell me, why is the sky blue?',
      },
    ],
  });

  const message = result.choices[0].message.content;
  console.log(`Assistant: ${message}`);
};

main();

from openai import OpenAI

client = OpenAI(
    base_url="https://api.ai.cc/v1",
    api_key="",  # insert your API key
)

response = client.chat.completions.create(
    model="alibaba/qwen3-max-instruct",
    messages=[
        {
            "role": "system",
            "content": "You are an AI assistant who knows everything.",
        },
        {
            "role": "user",
            "content": "Tell me, why is the sky blue?",
        },
    ],
)

message = response.choices[0].message.content

print(f"Assistant: {message}")

Product Detail

Discover Qwen3-Max Instruct, Alibaba’s flagship large language model (LLM), officially unveiled in September 2025. The model has over 1 trillion parameters, a significant step up in scale from earlier Qwen releases. Trained on 36 trillion tokens with a Mixture of Experts architecture, Qwen3-Max Instruct is particularly strong on technical, coding, and mathematical tasks. This instruction-tuned variant is optimized for fast, direct instruction following: it answers without emitting a separate step-by-step reasoning trace, which keeps responses quick and precise.

✨ Technical Specifications: Unrivaled Power

- 🚀 Parameter Scale: Over 1 trillion parameters (trillion-level scale)

- 💾 Training Data: 36 trillion tokens of pretraining data

- 🧠 Model Architecture: Mixture of Experts (MoE) transformer with global-batch load balancing for efficiency

- 📚 Context Length: Up to 262,144 tokens in total (up to about 258k input tokens and about 65k output tokens per request; both count against the same window)

- ⚡ Training Efficiency: 30% improvement in Model FLOPs Utilization (MFU) over the previous-generation Qwen2.5-Max

- 🗣️ Languages Supported: 100+ languages, with specific enhancements for mixed Chinese-English contexts

- 💡 Inference Mode: Non-thinking mode, prioritizing fast, direct instruction answers (Thinking version in development)

- 🔄 Context Caching: Reuses previously processed context instead of recomputing it, which significantly improves multi-turn conversation performance
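
The context-length figures in the list above (a 262,144-token window, roughly 258k input tokens, roughly 65k output tokens) can be turned into a quick budget check before sending a request. The following is a minimal sketch, not an official limit calculator: it assumes input and output tokens share the same window, uses the rounded 258k and 65k figures because exact caps are not given on this page, and `max_output_budget` is a hypothetical helper name.

```python
CONTEXT_WINDOW = 262_144  # total tokens, from the specification list
MAX_INPUT = 258_000       # rounded "258k" figure; the exact cap is an assumption
MAX_OUTPUT = 65_000       # rounded "65k" figure; the exact cap is an assumption


def max_output_budget(input_tokens: int) -> int:
    """Return the largest max_tokens value that still fits in the window."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    if input_tokens > MAX_INPUT:
        raise ValueError("prompt exceeds the input cap")
    # Assumption: prompt and completion are counted against one shared window.
    remaining = CONTEXT_WINDOW - input_tokens
    return min(MAX_OUTPUT, remaining)


if __name__ == "__main__":
    for prompt_tokens in (1_000, 200_000, 250_000):
        print(prompt_tokens, max_output_budget(prompt_tokens))
```

Under these assumptions a 1,000-token prompt leaves the full 65,000-token output cap available, while a 250,000-token prompt leaves room for only 12,144 output tokens.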

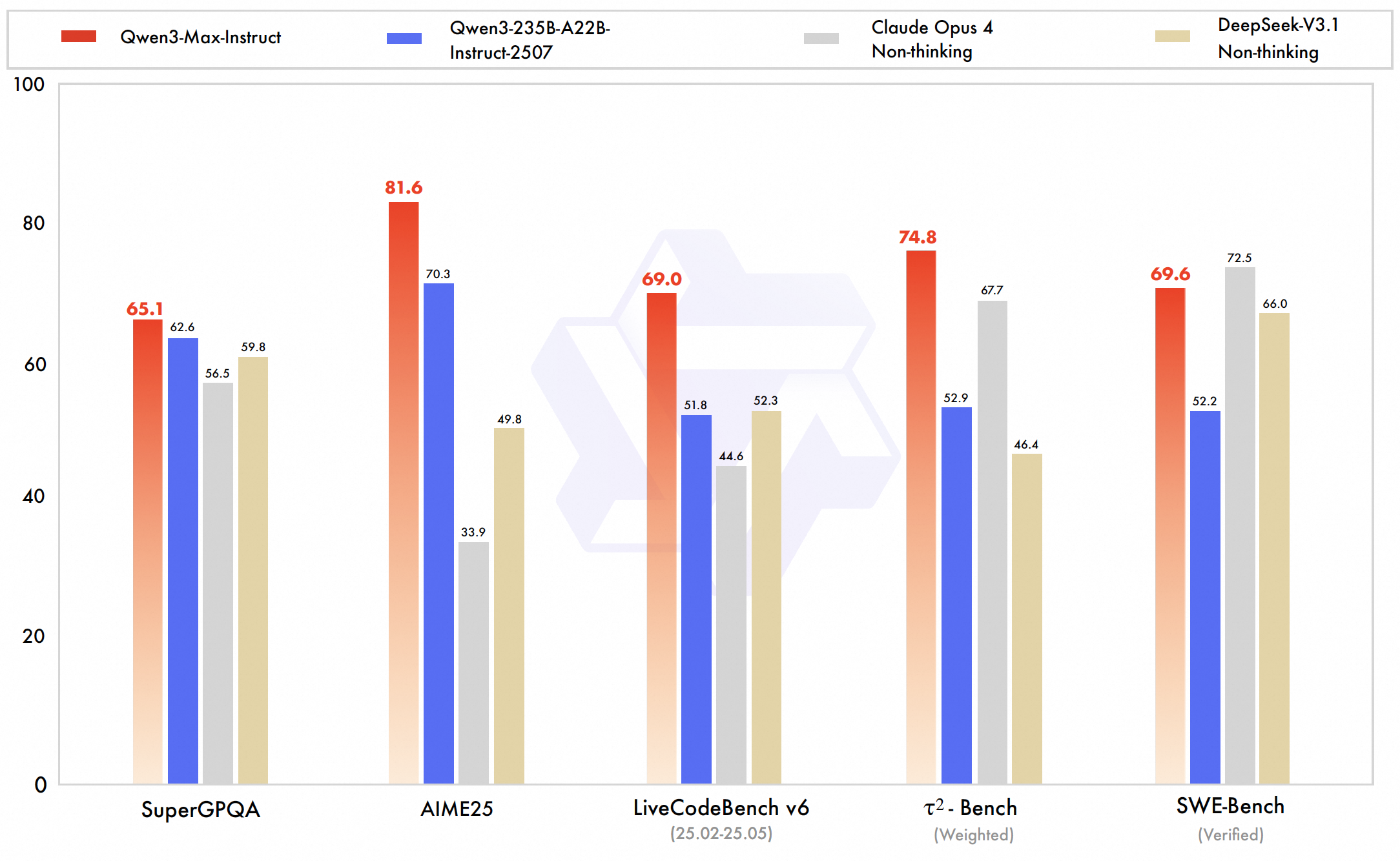

📊 Performance Benchmarks and Highlights: Setting New Standards

Qwen3-Max delivers world-class performance, excelling in particular at code, mathematical reasoning, and technical domains. According to Alibaba’s internal testing and public leaderboard results, it matches or outperforms top-tier AI models such as GPT-5-Chat, Claude Opus 4, and DeepSeek V3.1 across multiple benchmarks.

- 💻 SWE-Bench Verified: 69.6 (demonstrates strong real-world programming challenge solving)

- 🔬 Tau2-Bench: 74.8 (surpasses Claude Opus 4 and DeepSeek V3.1)

- ❓ SuperGPQA: 81.4 (leading question answering performance)

- ✍️ LiveCodeBench: Excellent real-code challenge results

- 🧮 AIME25 (Mathematical Reasoning): 80.6 (outperforming many competitors)

- 🏆 Arena-Hard v2: 86.1 (strong performance on difficult tasks)

- 🏅 LM Arena Ranking: #6 overall, ahead of many state-of-the-art models but behind top conversational models such as GPT-4o

💰 API Pricing: Cost-Effective Large-Scale AI

- Input price: $1.26 per million tokens

- Output price: $6.30 per million tokens
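
To see what these per-million-token prices mean for a single request, the arithmetic can be sketched as below. This is an illustrative estimate only: it assumes flat pricing with no caching discounts or volume tiers, and `estimate_cost` is a hypothetical helper, not part of any SDK.

```python
from decimal import Decimal

INPUT_PRICE_PER_M = Decimal("1.26")   # USD per million input tokens (listed above)
OUTPUT_PRICE_PER_M = Decimal("6.30")  # USD per million output tokens (listed above)
ONE_MILLION = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request from its token counts."""
    cost = (
        Decimal(input_tokens) * INPUT_PRICE_PER_M
        + Decimal(output_tokens) * OUTPUT_PRICE_PER_M
    ) / ONE_MILLION
    # Round to six decimal places so small requests stay visible.
    return cost.quantize(Decimal("0.000001"))


if __name__ == "__main__":
    # 10,000 prompt tokens and 500 completion tokens
    print(estimate_cost(10_000, 500))
```

For 10,000 input tokens and 500 output tokens this comes to about $0.0158. If the endpoint returns the standard OpenAI-style `usage` object (an assumption here), the two counts could be read from `response.usage.prompt_tokens` and `response.usage.completion_tokens`.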

💡 Key Use Cases: Powering Enterprise Innovation

- 🏢 Enterprise Applications: Ideal for technical domains requiring large context processing, such as code generation, mathematical modeling, and research assistance.

- 🌍 Multilingual Support: Robust bilingual and international applications with strong Chinese-English mixed-language handling.

- 📜 Huge Context Windows: Enables extremely long document understanding and multi-turn dialogue with persistence.

- 🛠️ Tool Use Ready: Optimized for retrieval-augmented generation and integration with external tools.

- 🚀 Fast Responses: Prioritizes quick instruction execution without chain-of-thought overhead.

- 🔗 Ecosystem Integration: Part of Alibaba’s Qwen3 family, including vision and reasoning variants (Qwen-VL-Max and Qwen3-Max-Thinking).
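
As a rough illustration of the tool-use point above, the sketch below builds a request in the OpenAI-style function-calling format and dispatches a tool call locally. It makes no network call. Whether this endpoint accepts the `tools` parameter for this model is an assumption, and `get_weather`, `WEATHER_TOOL`, `build_request`, and `dispatch_tool_call` are hypothetical names used only for illustration.

```python
import json

# Hypothetical tool definition in the OpenAI-style function-calling schema.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> dict:
    """Stand-in implementation; a real tool would query a weather service."""
    return {"city": city, "forecast": "unknown"}


TOOL_REGISTRY = {"get_weather": get_weather}


def build_request(user_prompt: str) -> dict:
    """Assemble keyword arguments for client.chat.completions.create."""
    return {
        "model": "alibaba/qwen3-max-instruct",
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": [WEATHER_TOOL],  # assumption: the endpoint supports tools
    }


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the named tool with JSON-encoded arguments; return a JSON result."""
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    arguments = json.loads(arguments_json)
    result = TOOL_REGISTRY[name](**arguments)
    return json.dumps(result)


if __name__ == "__main__":
    request = build_request("What is the weather in Paris?")
    print(json.dumps(request, indent=2))
    print(dispatch_tool_call("get_weather", '{"city": "Paris"}'))
```

In a live exchange, the name and JSON argument string would come from a tool call in the model's response, and the returned JSON would be sent back in a follow-up message with role `tool`.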

💻 Code Sample: Getting Started

import openai

client = openai.OpenAI(
    base_url="https://api.ai.cc/v1",
    api_key="YOUR_API_KEY",
)

chat_completion = client.chat.completions.create(
    model="alibaba/qwen3-max-instruct",
    messages=[
        {
            "role": "system",
            "content": "You are a helpful AI assistant.",
        },
        {
            "role": "user",
            "content": "Explain the concept of quantum entanglement in simple terms.",
        },
    ],
    max_tokens=500,
    temperature=0.7,
)

print(chat_completion.choices[0].message.content)

🆚 Comparison With Other Leading Models

vs GPT-5-Chat: Qwen3-Max Instruct takes the lead in coding benchmarks and agent capabilities, showing strong performance on complex software engineering tasks. GPT-5-Chat, however, benefits from a more mature ecosystem with broader multimodal features and wider commercial integrations. Qwen also offers a larger context window: about 262k tokens versus roughly 128k for GPT-5-Chat.

vs Claude Opus 4: Qwen3-Max surpasses Claude Opus 4 on agent and coding benchmarks, while also supporting a larger context window (about 262k tokens versus Claude’s 200k). Claude excels in long-duration agent workflows and safety-focused behaviors, making it a strong contender in those areas. Overall the two models are closely matched, and Claude retains an edge in conservative code editing tasks.

vs DeepSeek V3.1: Qwen3-Max outperforms DeepSeek V3.1 on key agent benchmarks such as Tau2-Bench and on various coding challenges, demonstrating stronger reasoning and tool-use abilities. DeepSeek V3.1 is likewise a text-focused model, and its 128k-token context window leaves it behind Qwen in extended context processing. Qwen’s training and scaling improvements support its strong showing on large-scale, complex tasks.

🔌 API Integration: Seamless Access

Qwen3-Max Instruct is readily accessible via the AI/ML API. Comprehensive documentation is available for developers seeking seamless integration.

❓ Frequently Asked Questions (FAQ)

Q: What architectural advancements distinguish Qwen3-Max Instruct's instruction-following capabilities?

A: Qwen3-Max Instruct employs a revolutionary instruction-tuning framework combining supervised fine-tuning with reinforcement learning from human feedback at an unprecedented scale. Its architecture features multi-granular instruction understanding, parsing complex directives with nuanced constraints and conditional logic. Advanced attention mechanisms dynamically weight instruction components, while specialized reasoning pathways activate based on instruction type, ensuring precise adherence to user intent.

Q: How does Qwen3-Max Instruct achieve its performance on complex, mixed-format instructions?

A: The model understands and executes directives that combine natural language, code, mathematical notation, and textual descriptions of diagrams through unified representation learning. It employs hierarchical instruction decomposition, breaking down complex requests into executable sub-tasks with dependency tracking. Advanced constraint satisfaction algorithms ensure all requirements are met, while dynamic style adaptation matches requested tones and formats.

Q: What specialized instruction-following capabilities make this model exceptional for enterprise applications?

A: Qwen3-Max Instruct offers enterprise-grade instruction capabilities, including precise adherence to business formatting standards, consistent application of brand voice guidelines, accurate execution of technical specifications, and reliable compliance with regulatory requirements. It excels at processing industry-specific instructions with domain-aware interpretation, maintaining contextual awareness across extended sequences, and providing transparent execution traces for verification.

Q: How does the model handle ambiguous or conflicting instructions while maintaining usefulness?

A: The architecture incorporates sophisticated instruction resolution mechanisms that identify ambiguities, potential conflicts, and underspecified elements through probabilistic reasoning and context analysis. When facing ambiguous instructions, the model employs clarification protocols, suggesting interpretations while maintaining flexibility. For conflicting directives, it implements priority-based resolution using learned hierarchies of user intent, ensuring both instruction fidelity and practical utility.

Q: What safety and alignment features ensure responsible instruction execution?

A: Qwen3-Max Instruct incorporates multi-layered safety verification, evaluating instructions against ethical guidelines and potential harms before execution. The model features instruction sanitization to neutralize harmful reinterpretations, value-preserving execution that maintains ethical constraints, and transparent reasoning about safety-related decisions. These safeguards ensure the model remains helpful, harmless, and honest while executing complex, open-ended instructions.
