// Node.js example: call the model through the OpenAI SDK
const { OpenAI } = require('openai');

const api = new OpenAI({
  baseURL: 'https://api.ai.cc/v1',
  apiKey: '', // insert your API key here
});

const main = async () => {
  const result = await api.chat.completions.create({
    model: 'deepseek/deepseek-thinking-v3.2-exp',
    messages: [
      {
        role: 'system',
        content: 'You are an AI assistant who knows everything.',
      },
      {
        role: 'user',
        content: 'Tell me, why is the sky blue?',
      },
    ],
  });

  // The final answer is the content of the first choice's message
  const message = result.choices[0].message.content;
  console.log(`Assistant: ${message}`);
};

main();

# Python example: call the model through the OpenAI SDK
from openai import OpenAI

client = OpenAI(
    base_url="https://api.ai.cc/v1",
    api_key="",  # insert your API key here
)

response = client.chat.completions.create(
    model="deepseek/deepseek-thinking-v3.2-exp",
    messages=[
        {
            "role": "system",
            "content": "You are an AI assistant who knows everything.",
        },
        {
            "role": "user",
            "content": "Tell me, why is the sky blue?",
        },
    ],
)

# The final answer is the content of the first choice's message
message = response.choices[0].message.content
print(f"Assistant: {message}")

Product Detail

DeepSeek V3.2 Exp Thinking is a hybrid reasoning model built for multi-step, complex reasoning tasks. Building on the V3.1 series, it improves "thinking" mode performance, contextual understanding, and problem solving in demanding fields such as software development, research, and knowledge-intensive industries. Designed for enterprise deployment and research workflows, it offers optimized token handling and faster inference in support of stepwise reasoning.

✨ Key Innovations & Architecture

DeepSeek V3.2 Exp Thinking stands out with several core innovations designed for efficiency and enhanced reasoning.

- ⚙️ Architecture: Transformer-based model integrated with DeepSeek Sparse Attention (DSA) for intelligent, selective token attention.

- 💡 Parameters: 671 billion total parameters, of which about 37 billion are active per token during inference.

- 📏 Context Window: A massive context window supporting up to 128K tokens, ideal for extensive document analysis.

- ✨ Sparse Attention (DSA): Focuses on selecting only the most relevant tokens, dramatically reducing computational load from quadratic to near-linear scaling with context length.

- 🧠 Thinking Mode: Activates explicit Chain-of-Thought generation prior to answers, enhancing transparency and complex problem-solving.

- ⚡ Training Efficiency: Follows a training regime similar to that of V3.1-Terminus, at reduced computational cost thanks to DSA.
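
To make the idea of selective token attention more concrete, here is a minimal pure-Python sketch of top-k sparse attention for a single query. It is an illustration only, not DeepSeek's DSA implementation: the function name `topk_sparse_attention` and the choice to rank tokens by the plain scaled dot-product score are assumptions made for this example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def topk_sparse_attention(query, keys, values, k):
    """Attend over only the k highest-scoring keys for one query.

    Hypothetical helper for illustration; the ranking rule is an assumption.
    Returns the attention output and the sorted indices that were selected.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    # Keep the indices of the k largest scores and ignore every other token.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    dim = len(values[0])
    output = [
        sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)
    ]
    return output, sorted(top)


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0], [5.0, 5.0]]
output, selected = topk_sparse_attention(query, keys, values, k=2)
print(selected, output)
```

With a fixed `k`, the softmax and weighted sum touch `k` tokens per query instead of the whole context, which is where the near-linear scaling comes from. This sketch still scores every key before selecting, so a practical design would need a cheaper scoring pass for that step.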

🚀 Performance & Benchmarks

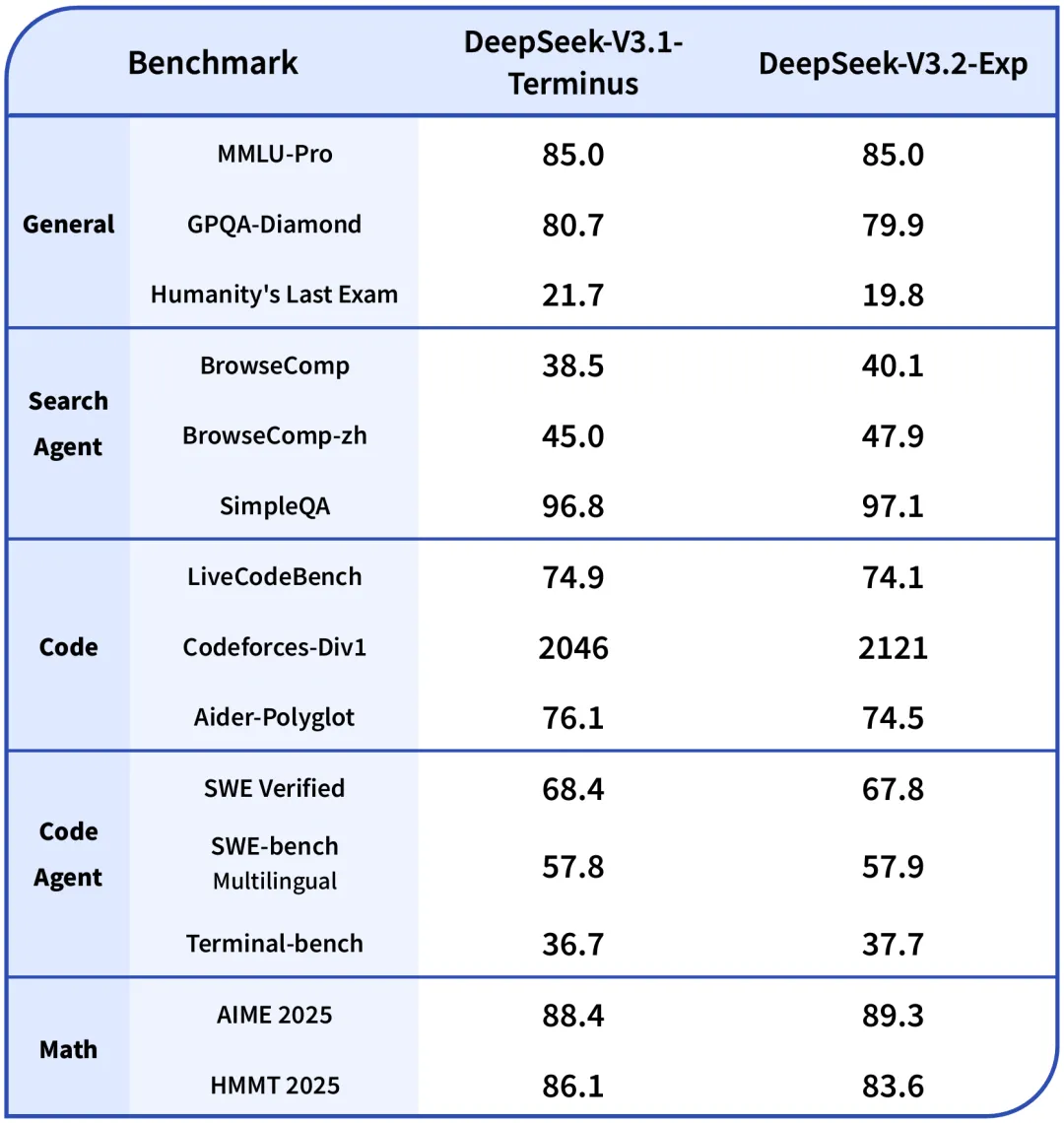

Overall, DeepSeek V3.2 Exp Thinking maintains performance on par with V3.1-Terminus in intricate reasoning tasks. Slight variations are observed across specific benchmarks, with particular strengths in mathematics contests such as AIME 2025 and programming challenges (Codeforces).

Performance Benchmarks for DeepSeek V3.2 Exp Thinking

💡 Advanced Features

- Chain-of-Thought Reasoning: Generates explicit intermediate reasoning steps before final answers, significantly enhancing transparency and complex problem-solving capabilities.

- DeepSeek Sparse Attention (DSA): Enables fine-grained token selection for long contexts, reducing compute costs while preserving high output quality.

- Large Context Window: Supports up to 128K tokens, making it highly suitable for multi-document workflows and deep knowledge integration.

- Streaming Support: Facilitates streaming of both reasoning content and final outputs for real-time, interactive experiences.
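
Streaming could be consumed along the following lines. This is a sketch under assumptions: `stream=True` is a standard option of the OpenAI SDK's chat completions call, but the `reasoning_content` field on each delta is assumed here (it follows DeepSeek's own API convention and may not be exposed by every endpoint). The helper names `collect_stream`, `stream_answer`, and `fake_chunk` are hypothetical. The demonstration at the bottom runs offline on hand-made chunks.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Accumulate reasoning text and answer text from streamed chat chunks."""
    reasoning_parts, answer_parts = [], []
    for chunk in chunks:
        if not chunk.choices:
            continue
        delta = chunk.choices[0].delta
        # Assumed field name; getattr keeps this safe when it is absent.
        reasoning = getattr(delta, "reasoning_content", None)
        if reasoning:
            reasoning_parts.append(reasoning)
        content = getattr(delta, "content", None)
        if content:
            answer_parts.append(content)
    return "".join(reasoning_parts), "".join(answer_parts)


def stream_answer(api_key, question):
    """Call the endpoint with stream=True (needs the openai package and network)."""
    from openai import OpenAI

    client = OpenAI(base_url="https://api.ai.cc/v1", api_key=api_key)
    stream = client.chat.completions.create(
        model="deepseek/deepseek-thinking-v3.2-exp",
        messages=[{"role": "user", "content": question}],
        stream=True,
    )
    return collect_stream(stream)


def fake_chunk(reasoning=None, content=None):
    """Build an object shaped like a streamed chunk, for offline testing only."""
    delta = SimpleNamespace(reasoning_content=reasoning, content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


demo = [
    fake_chunk(reasoning="Rayleigh scattering "),
    fake_chunk(reasoning="favors blue."),
    fake_chunk(content="Because of "),
    fake_chunk(content="Rayleigh scattering."),
]
reasoning_text, answer_text = collect_stream(demo)
print("Reasoning:", reasoning_text)
print("Answer:", answer_text)
```

Keeping the accumulation logic separate from the network call makes it easy to test, and an interactive client could print each piece as it arrives instead of joining at the end.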

🎯 Practical Use Cases

- ✔️ Complex reasoning tasks demanding stepwise deduction, such as advanced mathematical problem-solving and logical puzzles.

- ✔️ Document analysis and summarization where extensive context windows and structured reasoning are paramount.

- ✔️ Conversational agents requiring explicit reasoning transparency for increased trust and explainability.

- ✔️ Knowledge-heavy applications involving multiple linked documents or extensive logs.

- ✔️ Tool-augmented AI agents where integrating chain-of-thought and function calls improves task control and efficacy.

💰 API Pricing

- 1M input tokens (CACHE HIT): $0.0294

- 1M input tokens (CACHE MISS): $0.294

- 1M output tokens: $0.441
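
As a rough illustration of how these rates combine, the sketch below estimates the cost of a single request from the three listed prices. The function name `estimate_cost` and the example token counts are hypothetical, and actual billing may round or meter differently.

```python
# Listed prices in USD per one million tokens.
PRICE_PER_MILLION = {
    "input_cache_hit": 0.0294,
    "input_cache_miss": 0.294,
    "output": 0.441,
}


def estimate_cost(cache_hit_tokens, cache_miss_tokens, output_tokens):
    """Return the estimated USD cost for one request (hypothetical helper)."""
    total = (
        cache_hit_tokens * PRICE_PER_MILLION["input_cache_hit"]
        + cache_miss_tokens * PRICE_PER_MILLION["input_cache_miss"]
        + output_tokens * PRICE_PER_MILLION["output"]
    )
    return total / 1_000_000


# Example: 20,000 cached input tokens, 100,000 uncached, 4,000 output tokens.
cost = estimate_cost(20_000, 100_000, 4_000)
print(f"Estimated cost: ${cost:.6f}")
```

For the example figures, the uncached input dominates: 100,000 tokens at the cache-miss rate cost $0.0294, against $0.000588 for the cached input and $0.001764 for the output, for a total of about $0.0318.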

📊 Model Comparison

Vs. DeepSeek-V3.1-Terminus

DeepSeek V3.2 Exp Thinking employs sparse attention to significantly reduce computational overhead while maintaining near-identical output quality to V3.1-Terminus. Both expose chain-of-thought reasoning in thinking mode; the main difference is the lower long-context cost that DSA brings to V3.2-Exp.

Vs. OpenAI GPT-4o

While GPT-4o delivers high-quality responses, its processing for very long contexts can be costly. DeepSeek V3.2 Exp Thinking scales efficiently up to 128K tokens, leveraging sparse attention for faster long-context reasoning, whereas GPT-4o primarily relies on dense attention. GPT-4o boasts broader multimodal support, but DeepSeek focuses on optimized textual reasoning transparency.

Vs. Qwen-3

Both models support large contexts. However, DeepSeek's sparse attention critically reduces computational costs for extended inputs. DeepSeek V3.2 Exp Thinking also offers explicit chain-of-thought in its Thinking mode, while Qwen-3 generally emphasizes broader multimodal capabilities.

❓ Frequently Asked Questions (FAQ)

Q1: What is DeepSeek V3.2 Exp Thinking and how does it enhance AI reasoning?

A1: DeepSeek V3.2 Exp Thinking is a specialized AI model designed for complex reasoning tasks that explicitly shows its thought process. It uses systematic chain-of-thought reasoning, breaking down problems step by step to enhance accuracy, provide transparency, and better handle multi-step logical problems.

Q2: What are the primary benefits of DeepSeek's "Thinking Mode"?

A2: The "Thinking Mode" offers higher accuracy on complex tasks, transparent problem-solving, improved performance in mathematical and logical challenges, enhanced educational value by showcasing reasoning, and the ability to detect and correct errors mid-reasoning. This makes it ideal for applications requiring reliability and explainability.

Q3: For what types of tasks is DeepSeek V3.2 Exp Thinking best suited?

A3: It's ideal for complex mathematical problem-solving, scientific reasoning, logical puzzles, strategic planning, code debugging, legal/ethical reasoning, and research synthesis – essentially any scenario where understanding the reasoning process is as crucial as the final answer.

Q4: How does DeepSeek Sparse Attention (DSA) benefit the model?

A4: DSA allows the model to selectively focus on the most relevant tokens in long contexts, significantly reducing computational costs and memory usage from quadratic to near-linear scaling, all while maintaining high output quality. This enables efficient processing of larger context windows.

Q5: Can DeepSeek V3.2 Exp Thinking handle extensive documents and multi-document workflows?

A5: Yes, with its large context window supporting up to 128K tokens, DeepSeek V3.2 Exp Thinking is exceptionally well-suited for comprehensive document analysis, summarization, and workflows that involve integrating information from multiple linked documents or extensive log files.
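
For multi-document workflows, one practical question is how many documents fit in a single request. The sketch below greedily packs documents into batches that stay under a token budget. Several things are assumptions: the window is taken as 128,000 tokens, tokens are estimated with a crude four-characters-per-token heuristic rather than the model's real tokenizer, and the helper names and the 8,000-token output reserve are hypothetical.

```python
import math


def estimate_tokens(text, chars_per_token=4):
    """Crude token estimate; a real tokenizer would give different counts."""
    return max(1, math.ceil(len(text) / chars_per_token))


def pack_documents(docs, window=128_000, reserved_for_output=8_000):
    """Greedily group (name, text) pairs into batches that fit the budget."""
    budget = window - reserved_for_output
    batches, current, used = [], [], 0
    for name, text in docs:
        need = estimate_tokens(text)
        if need > budget:
            raise ValueError(f"{name} alone exceeds the budget; split it first")
        if used + need > budget:
            # Current batch is full: close it and start a new one.
            batches.append(current)
            current, used = [], 0
        current.append(name)
        used += need
    if current:
        batches.append(current)
    return batches


docs = [
    ("report_a", "x" * 200_000),  # about 50,000 estimated tokens each
    ("report_b", "x" * 200_000),
    ("report_c", "x" * 200_000),
]
print(pack_documents(docs))
```

Reserving part of the window for output matters more for a thinking model, since the reasoning text may also consume tokens before the final answer is produced.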
